Architecture

Deep dive into VoIPBIN’s internal architecture, microservices, communication patterns, and deployment.

Note

AI Context

This section provides comprehensive documentation of VoIPBIN’s system internals. Relevant when an AI agent needs to understand how the platform is built, how services communicate, or how infrastructure is deployed. For API usage, see the individual resource documentation pages.

Overview

Note

AI Context

This page describes VoIPBIN’s high-level system architecture, including the three major layers (API gateway, microservices, real-time communication) and core design principles. Relevant when an AI agent needs to understand the overall platform structure, service categories, or technology stack choices.

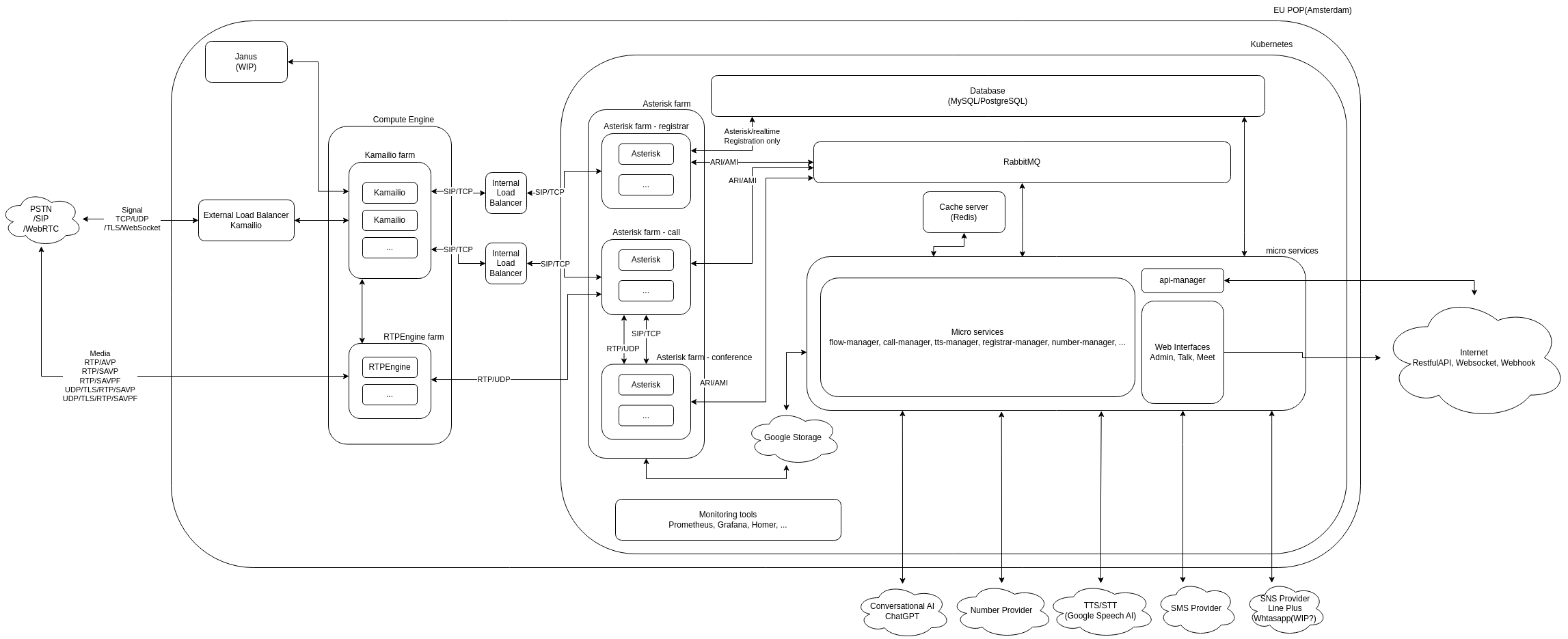

VoIPBIN is a cloud-native Communication Platform as a Service (CPaaS) built on a modern microservices architecture. The platform provides PSTN calls, WebRTC, SMS, conferencing, AI-powered features, and workflow orchestration.

VoIPBIN is designed from the ground up for scalability, reliability, and developer productivity, enabling businesses to build sophisticated communication solutions through simple API calls.

High-Level System Architecture

VoIPBIN consists of three major architectural layers:

+----------------------------------------------------------------------+

| Client Applications |

| (Web Apps, Mobile Apps, Server-to-Server Integrations) |

+------------------------+---------------------------------------------+

| HTTPS/REST API

v

+----------------------------------------------------------------------+

| API Gateway Layer |

| (bin-api-manager) |

| o Authentication & Authorization |

| o Rate Limiting & Throttling |

| o Request Routing & Load Balancing |

+------------------------+---------------------------------------------+

| RabbitMQ RPC

v

+----------------------------------------------------------------------+

| Microservices Layer |

| +--------------+ +--------------+ +--------------+ |

| | Call Manager | | Flow Manager | | AI Manager | |

| +--------------+ +--------------+ +--------------+ |

| +--------------+ +--------------+ +--------------+ |

| |Chat Manager | | SMS Manager | |Queue Manager | |

| +--------------+ +--------------+ +--------------+ |

| +--------------+ +--------------+ +--------------+ |

| |Agent Manager | | Billing Mgr | |Webhook Mgr | |

| +--------------+ +--------------+ +--------------+ |

| ... 30+ services |

+------------------------+---------------------------------------------+

|

v

+----------------------------------------------------------------------+

| Real-Time Communication Layer |

| +--------------+ +--------------+ +--------------+ |

| | Kamailio | | Asterisk | | RTPEngine | |

| | (SIP Proxy) | |(Media Server)| |(Media Proxy) | |

| +--------------+ +--------------+ +--------------+ |

+----------------------------------------------------------------------+

+----------------------------------------------------------------------+

| Shared Infrastructure |

| o MySQL Database o Redis Cache o RabbitMQ o Kubernetes |

+----------------------------------------------------------------------+

Architectural Layers

1. API Gateway Layer

The API Gateway (bin-api-manager) serves as the single entry point for all external requests:

Authentication: JWT-based authentication for all API requests

Authorization: Permission checks based on customer and agent roles

Request Routing: Routes authenticated requests to appropriate backend services via RabbitMQ RPC

Protocol Translation: Converts HTTP/REST to internal RabbitMQ messaging

Response Aggregation: Collects responses from backend services and returns them to clients

2. Microservices Layer

VoIPBIN consists of 30+ specialized Go microservices, organized by domain:

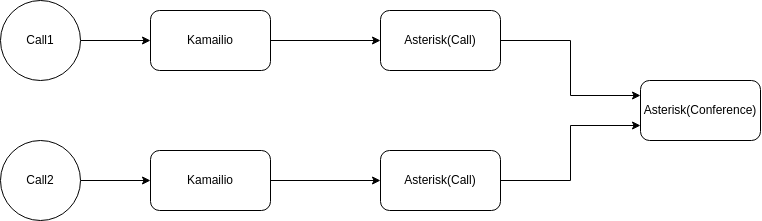

Communication Services:

bin-call-manager: Call lifecycle and routing

bin-conference-manager: Conference bridge management

bin-message-manager: SMS messaging

bin-talk-manager: Real-time chat

AI Services:

bin-ai-manager: AI assistant, transcription, summarization

bin-transcribe-manager: Speech-to-text processing

bin-tts-manager: Text-to-speech synthesis

Workflow Services:

bin-flow-manager: Call flow orchestration and IVR

bin-queue-manager: Call queue management

bin-campaign-manager: Outbound campaign automation

Management Services:

bin-agent-manager: Agent state and presence

bin-billing-manager: Usage tracking and billing

bin-webhook-manager: Webhook delivery

bin-storage-manager: File and media storage

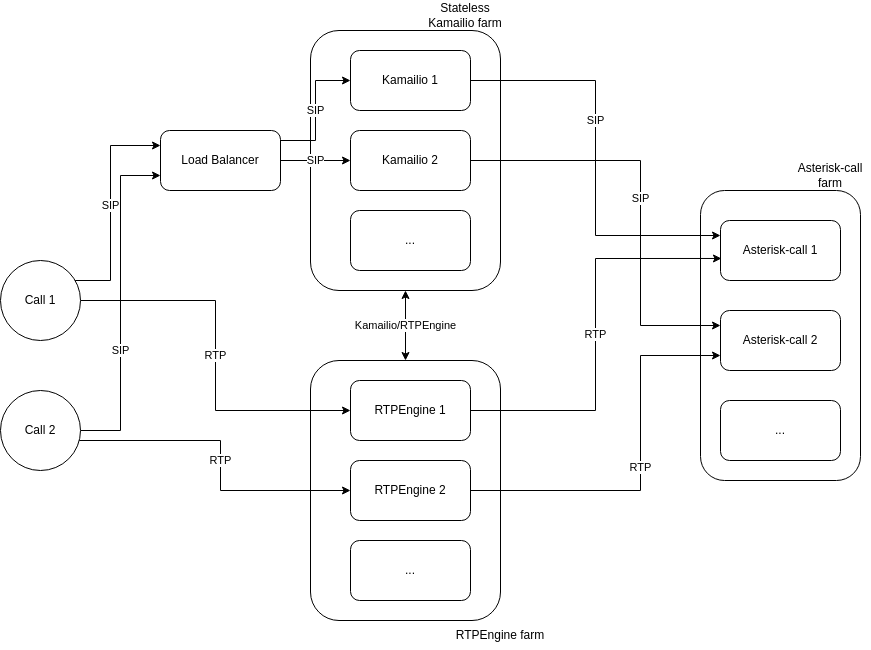

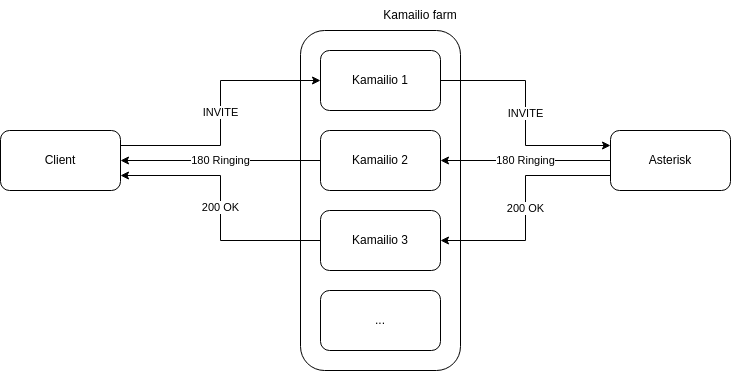

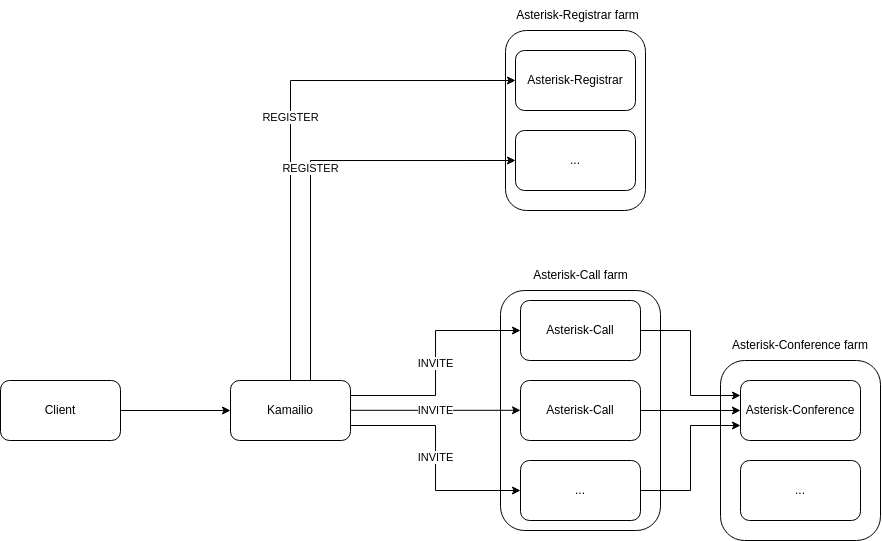

3. Real-Time Communication Layer

See RTC Architecture for detailed information about the VoIP stack.

Core Design Principles

VoIPBIN is designed around these key architectural principles:

Microservices Architecture

Service Isolation:

+------------+ +------------+ +------------+

| Service A | | Service B | | Service C |

| | | | | |

| o Domain | | o Domain | | o Domain |

| o Logic | | o Logic | | o Logic |

| o Data | | o Data | | o Data |

+------+-----+ +------+-----+ +------+-----+

| | |

+------------------+------------------+

Message Queue (RabbitMQ)

Domain Isolation: Each service owns its domain logic and data

Independent Deployment: Services can be deployed independently

Technology Flexibility: Services can use different technologies as needed

Fault Isolation: Failure in one service doesn’t cascade

Event-Driven Architecture

Event Flow:

+--------------+ Event +--------------+

| Service |----------------> | Message |

| (Publisher) | | Queue |

+--------------+ +-------+------+

|

+----------------+

| |

v v

+------------+ +------------+

| Subscriber | | Subscriber |

| Service A | | Service B |

+------------+ +------------+

Asynchronous Communication: Services communicate via events

Loose Coupling: Publishers don’t know about subscribers

Scalability: Multiple subscribers can process events in parallel

Reliability: Message queues provide guaranteed delivery
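The publish/subscribe flow above can be sketched with an in-memory fan-out. This is only an illustration of the pattern: the `Bus` and `Event` types are hypothetical, Go channels stand in for the broker, and none of the durability a real message queue provides is modelled.

```go
package main

import (
	"fmt"
	"sync"
)

// Event is a hypothetical envelope; the field names are illustrative only.
type Event struct {
	Type    string
	Payload string
}

// Bus is an in-memory stand-in for a broker exchange.
type Bus struct {
	mu   sync.RWMutex
	subs map[string][]chan Event
}

func NewBus() *Bus {
	return &Bus{subs: make(map[string][]chan Event)}
}

// Subscribe registers a buffered channel for one event type.
func (b *Bus) Subscribe(eventType string) <-chan Event {
	ch := make(chan Event, 16)
	b.mu.Lock()
	defer b.mu.Unlock()
	b.subs[eventType] = append(b.subs[eventType], ch)
	return ch
}

// Publish copies the event to every subscriber of its type and returns the
// number of deliveries. It blocks if a subscriber's buffer is full.
func (b *Bus) Publish(e Event) int {
	b.mu.RLock()
	defer b.mu.RUnlock()
	for _, ch := range b.subs[e.Type] {
		ch <- e
	}
	return len(b.subs[e.Type])
}

func main() {
	bus := NewBus()
	billing := bus.Subscribe("call.created")
	webhook := bus.Subscribe("call.created")

	n := bus.Publish(Event{Type: "call.created", Payload: "call-123"})
	fmt.Println("delivered to", n, "subscribers")
	fmt.Println("billing got:", (<-billing).Payload)
	fmt.Println("webhook got:", (<-webhook).Payload)
}
```

The publisher only names an event type, so adding a third subscriber requires no change on the publishing side.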

API Gateway Pattern

External Request Flow:

Client App API Gateway Backend Services

| | |

| HTTPS/REST | |

+---------------------------> |

| | 1. Authenticate |

| | 2. Authorize |

| | 3. Route Request |

| | |

| | RabbitMQ RPC |

| +--------------------------->

| | |

| | Response |

| <---------------------------+

| JSON Response | |

<---------------------------+ |

| | |

Single Entry Point: All external traffic goes through one gateway

Security Layer: Authentication and authorization at the edge

Protocol Translation: HTTP to internal messaging protocols

Service Discovery: Gateway knows how to reach all services

Shared Data Layer

Data Architecture:

+------------+ +------------+ +------------+

| Service | | Service | | Service |

| A | | B | | C |

+------+-----+ +-------+----+ +--------+---+

| | |

+----------------+----------------+

| | |

v v v

+-------------------------------------------+

| Redis Cache (Hot Data) |

+-------------------------------------------+

v v v

+-------------------------------------------+

| MySQL Database (Cold Data) |

+-------------------------------------------+

Shared MySQL: Single source of truth for all data

Redis Cache: Fast access to frequently used data

Consistent Schema: All services use common database schema

Transaction Support: ACID guarantees for critical operations
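One common way to combine a hot cache with a database of record is a cache-aside read. The sketch below assumes that strategy; the text does not say which caching policy VoIPBIN services actually use. In-memory maps stand in for Redis and MySQL, and all type names are hypothetical.

```go
package main

import (
	"errors"
	"fmt"
)

var ErrNotFound = errors.New("not found")

// mapStore is an in-memory stand-in for either tier (Redis or MySQL).
// It counts reads so the example can show which tier served a request.
type mapStore struct {
	data  map[string]string
	reads int
}

func newMapStore(data map[string]string) *mapStore {
	if data == nil {
		data = make(map[string]string)
	}
	return &mapStore{data: data}
}

func (m *mapStore) Get(key string) (string, error) {
	m.reads++
	v, ok := m.data[key]
	if !ok {
		return "", ErrNotFound
	}
	return v, nil
}

func (m *mapStore) Set(key, value string) { m.data[key] = value }

// CachedReader implements a cache-aside read: try the cache, fall back to
// the database on a miss, then populate the cache for the next reader.
type CachedReader struct {
	cache *mapStore
	db    *mapStore
}

func (r *CachedReader) Get(key string) (string, error) {
	if v, err := r.cache.Get(key); err == nil {
		return v, nil // cache hit
	}
	v, err := r.db.Get(key)
	if err != nil {
		return "", err
	}
	r.cache.Set(key, v)
	return v, nil
}

func main() {
	db := newMapStore(map[string]string{"call:1": "active"})
	r := &CachedReader{cache: newMapStore(nil), db: db}

	for i := 0; i < 2; i++ {
		v, err := r.Get("call:1")
		fmt.Println(v, err, "db reads so far:", db.reads)
	}
}
```

A real implementation would also need expiry or invalidation on writes, which this sketch leaves out.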

Communication Channels

VoIPBIN supports multiple communication channels through dedicated gateways:

Voice Communication:

PSTN: Traditional phone calls via carrier integrations

WebRTC: Browser-based voice and video calls

SIP: Direct SIP trunking for enterprise customers

Messaging:

SMS: Text messaging via carrier integrations

Chat: Real-time chat with WebSocket support

Email: Email notifications and campaigns

AI-Enhanced Communication:

AI Assistants: Voice-enabled AI agents for customer service

Transcription: Real-time and batch speech-to-text

Summarization: Call summarization and insights

Sentiment Analysis: Real-time emotion detection

Integration Capabilities

VoIPBIN provides multiple integration methods:

REST API:

Comprehensive REST API for all platform features

OpenAPI/Swagger documentation

SDKs for multiple languages

WebSocket:

Real-time event streaming

Bi-directional media streaming

Live transcription feeds

Webhooks:

Event notifications to external systems

Configurable retry policies

Signature verification for security

Direct Database Access:

Read replicas for reporting

Analytics database for business intelligence
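The webhook signature verification listed above is typically done by recomputing a keyed hash over the raw request body. The sketch below assumes a hex-encoded HMAC-SHA256 scheme with a shared secret; the actual algorithm, encoding, and header used by VoIPBIN are not specified here, so treat every name as hypothetical.

```go
package main

import (
	"crypto/hmac"
	"crypto/sha256"
	"encoding/hex"
	"fmt"
)

// Sign returns the hex-encoded HMAC-SHA256 of body under secret.
func Sign(secret, body []byte) string {
	mac := hmac.New(sha256.New, secret)
	mac.Write(body)
	return hex.EncodeToString(mac.Sum(nil))
}

// Verify recomputes the signature and compares it in constant time.
func Verify(secret, body []byte, signatureHex string) bool {
	got, err := hex.DecodeString(signatureHex)
	if err != nil {
		return false
	}
	mac := hmac.New(sha256.New, secret)
	mac.Write(body)
	return hmac.Equal(got, mac.Sum(nil))
}

func main() {
	secret := []byte("shared-webhook-secret") // hypothetical secret
	body := []byte(`{"type":"call.created","id":"call-123"}`)

	sig := Sign(secret, body)
	fmt.Println("valid:", Verify(secret, body, sig))
	fmt.Println("tampered:", Verify(secret, []byte(`{"type":"call.deleted"}`), sig))
}
```

The receiver must hash the exact bytes it received, before any JSON parsing or re-serialization.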

Key Architectural Benefits

VoIPBIN’s architecture is designed to deliver these advantages:

Scalability

Horizontal Scaling: Add more service instances to handle increased load

Independent Scaling: Scale only the services that need more capacity

Auto-Scaling: Kubernetes automatically scales based on metrics

Global Distribution: Deploy services across multiple regions

Reliability

Fault Isolation: Issues in one service don’t affect others

Circuit Breakers: Prevent cascading failures

Automatic Failover: Kubernetes restarts failed containers

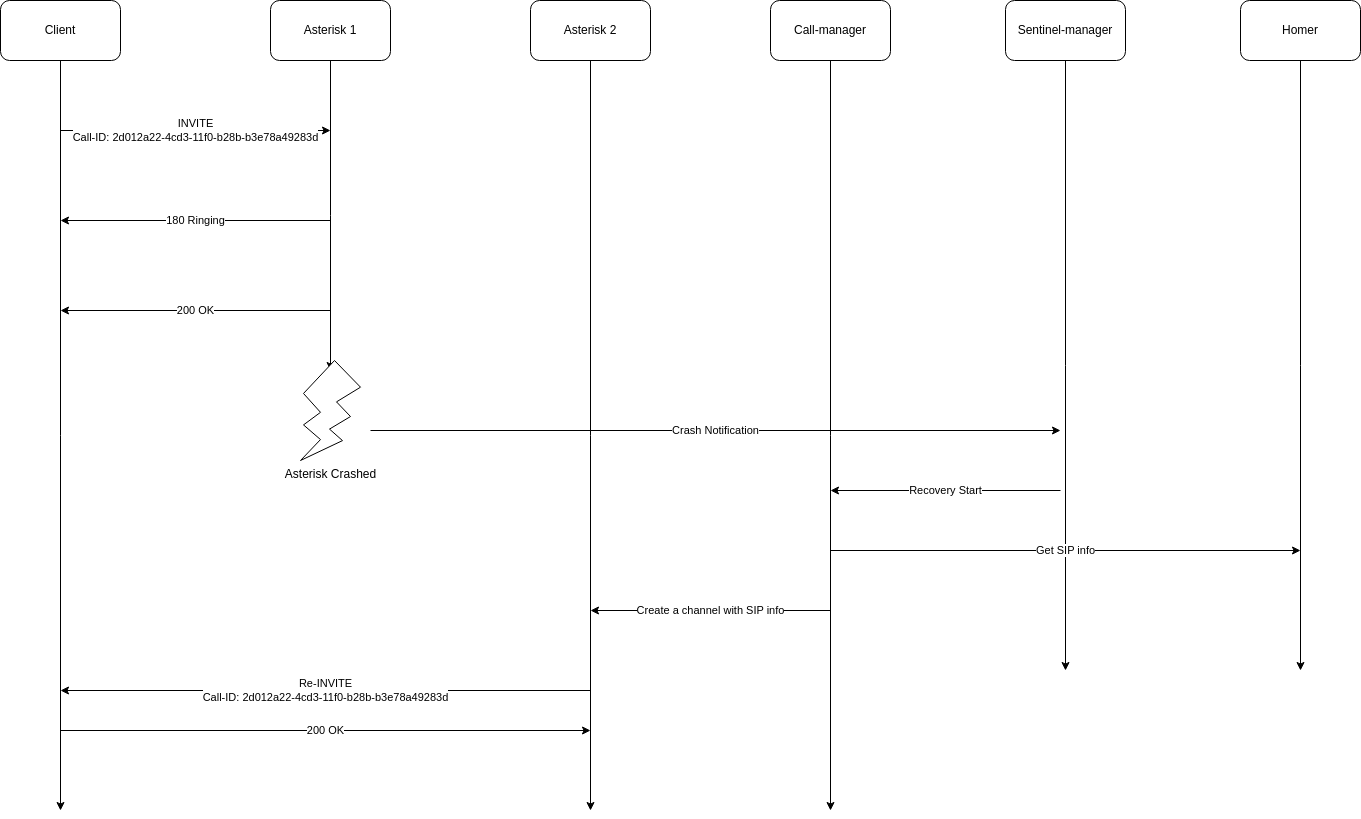

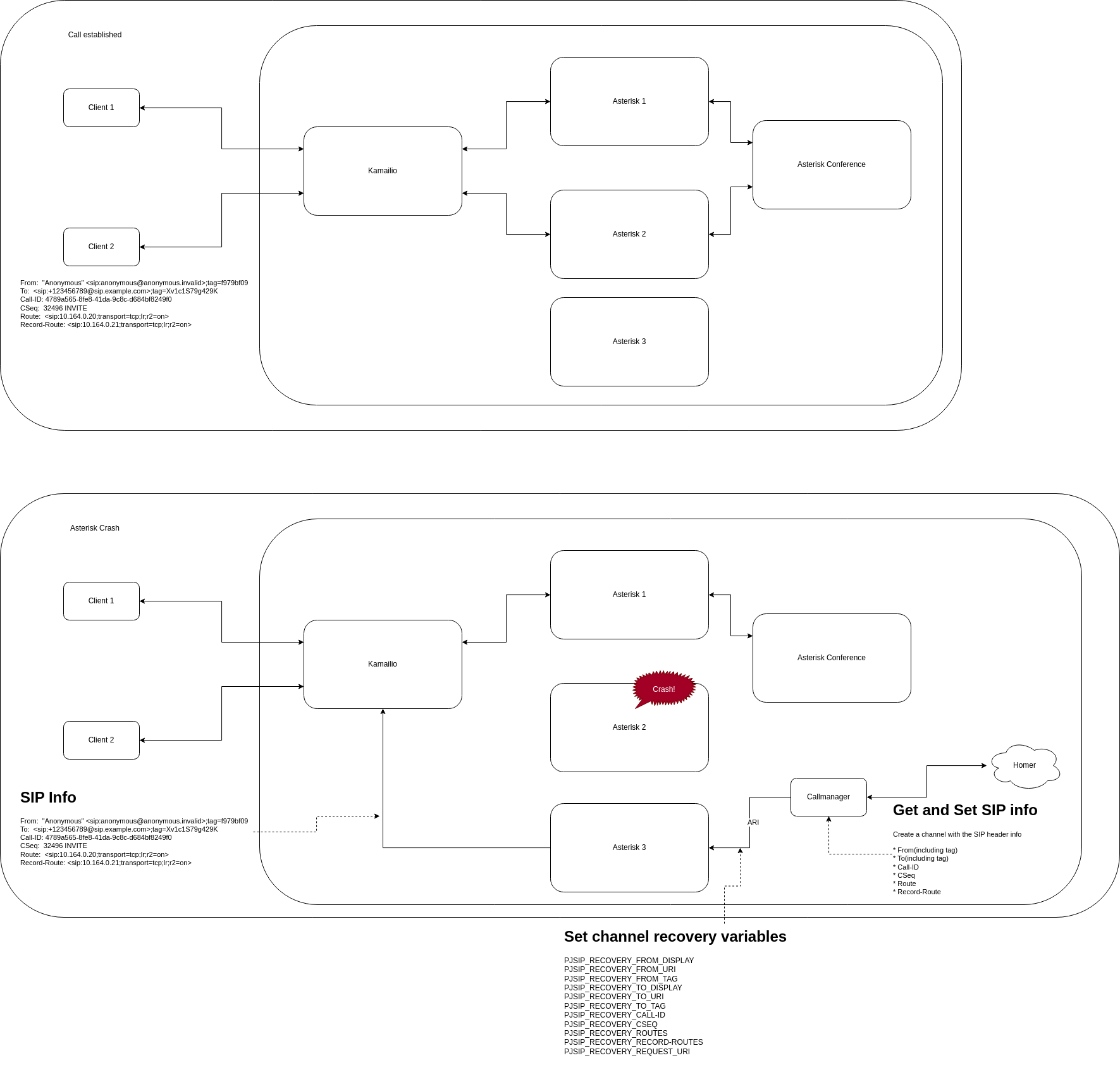

SIP Session Recovery: Maintain calls even when servers crash

Message Persistence: RabbitMQ ensures no messages are lost

Security

API Gateway Security: All authentication at the edge

Service Isolation: Services communicate via internal network only

Encryption: TLS for all external communication

Secret Management: Kubernetes secrets for sensitive data

Audit Logging: Complete audit trail of all operations

Developer Productivity

Simple REST API: Easy to integrate with any application

Comprehensive Docs: Detailed documentation with examples

Webhook Events: Real-time notifications of system events

Test Environment: Sandbox for development and testing

SDK Support: Official SDKs for popular languages

Operational Excellence

Centralized Logging: All logs aggregated in one place

Metrics & Monitoring: Prometheus metrics for all services

Distributed Tracing: Track requests across services

Health Checks: Automated health monitoring

Zero-Downtime Deploys: Rolling updates without service interruption

Service Dependencies

VoIPBIN services have well-defined dependencies for coordinated operations:

Core Service Dependencies:

+-----------------------------------------------------------------+

| bin-api-manager |

| (API Gateway) |

| ------------------------------------------------------------- |

| Depends on: ALL backend services for RPC routing |

+-----------------------------------------------------------------+

|

+-----------------------+-----------------------+

| | |

v v v

+-------------+ +-------------+ +-------------+

|bin-call-mgr | |bin-flow-mgr | |bin-ai-mgr |

+------+------+ +------+------+ +------+------+

| | |

| | |

v v v

+-------------+ +-------------+ +-------------+

|bin-billing | |bin-call-mgr | |bin-transcribe|

|bin-webhook | |bin-queue-mgr| |bin-tts-mgr |

|bin-number | |bin-ai-mgr | |bin-pipecat |

+-------------+ +-------------+ +-------------+

Key Dependency Patterns:

Call Processing Chain:

bin-call-manager

+--> bin-flow-manager (IVR and call flows)

+--> bin-billing-manager (usage tracking)

+--> bin-webhook-manager (event notifications)

+--> bin-transcribe-manager (call transcription)

+--> bin-number-manager (phone number lookup)

AI Voice Pipeline:

bin-pipecat-manager

+--> bin-ai-manager (LLM coordination)

+--> bin-call-manager (call control)

+--> bin-transcribe-manager (STT)

Flow Orchestration:

bin-flow-manager

+--> bin-call-manager (call actions)

+--> bin-queue-manager (queue operations)

+--> bin-ai-manager (AI interactions)

+--> bin-conference-manager (conference bridges)

Infrastructure Monitoring:

bin-sentinel-manager

+--> bin-call-manager (SIP session recovery events)

Circular Dependencies:

VoIPBIN avoids circular dependencies through:

Event-Driven Decoupling: Services publish events, others subscribe

Gateway Orchestration: API Gateway coordinates cross-service operations

Shared Data Layer: Services share data via MySQL, not direct calls

Technology Stack

VoIPBIN is built on modern, proven technologies:

Backend Services:

Language: Go (Golang) for all microservices

API Framework: Gin for HTTP routing

RPC: RabbitMQ for inter-service communication

Database: MySQL for persistent storage

Cache: Redis for session and hot data

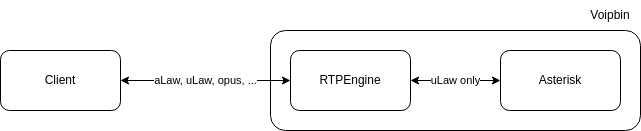

Real-Time Communication:

SIP Proxy: Kamailio for SIP routing

Media Server: Asterisk for call processing

Media Proxy: RTPEngine for RTP handling

Infrastructure:

Container Runtime: Docker for containerization

Orchestration: Kubernetes (GKE) for container management

Cloud Provider: Google Cloud Platform

Monitoring: Prometheus + Grafana for metrics

Logging: ELK stack for centralized logging

Message Queue:

Broker: RabbitMQ for async messaging

Event Bus: ZeroMQ for pub/sub events

This architecture enables VoIPBIN to deliver enterprise-grade communication services at scale while maintaining developer simplicity and operational excellence.

Backend Microservices

Note

AI Context

This page describes VoIPBIN’s 30+ Go microservices, their organization by domain, the API gateway (bin-api-manager), and special service architectures (pipecat hybrid Go/Python, sentinel Kubernetes monitoring). Relevant when an AI agent needs to understand which service handles a specific domain, the routing table from HTTP endpoints to backend services, or the authentication/authorization flow.

VoIPBIN’s backend consists of 30+ specialized Go microservices organized into functional domains. Each service owns its specific business logic and communicates with others through a message queue, enabling independent scaling, deployment, and development.

Microservices Organization

Services are organized by functional domain:

VoIPBIN Microservices Architecture

+-------------------------------------------------------------+

| Communication Services |

+-------------------------------------------------------------+

| bin-call-manager | Call lifecycle and routing |

| bin-conference-manager | Conference bridge management |

| bin-message-manager | SMS messaging (Telnyx/MsgBird) |

| bin-talk-manager | Real-time chat |

| bin-email-manager | Email campaigns |

| bin-transfer-manager | Call transfer operations |

+-------------------------------------------------------------+

+-------------------------------------------------------------+

| AI Services |

+-------------------------------------------------------------+

| bin-ai-manager | AI assistants and processing |

| bin-transcribe-manager | Speech-to-text transcription |

| bin-tts-manager | Text-to-speech synthesis |

| bin-pipecat-manager | Real-time AI voice (Go/Python) |

+-------------------------------------------------------------+

+-------------------------------------------------------------+

| Workflow Services |

+-------------------------------------------------------------+

| bin-flow-manager | Call flow and IVR orchestration |

| bin-queue-manager | Call queue management |

| bin-campaign-manager | Outbound campaign automation |

| bin-outdial-manager | Outbound dialing targets |

| bin-conversation-manager| Conversation tracking |

+-------------------------------------------------------------+

+-------------------------------------------------------------+

| Management Services |

+-------------------------------------------------------------+

| bin-agent-manager | Agent state and presence |

| bin-billing-manager | Usage tracking and billing |

| bin-customer-manager | Customer and API key management |

| bin-webhook-manager | Webhook delivery |

| bin-storage-manager | File, media, and recordings |

| bin-number-manager | Phone number management |

| bin-tag-manager | Customer tag management |

+-------------------------------------------------------------+

+-------------------------------------------------------------+

| Integration Services |

+-------------------------------------------------------------+

| bin-talk-manager | Agent UI backend |

| bin-hook-manager | External webhook gateway |

| bin-sentinel-manager | Kubernetes pod monitoring |

| bin-route-manager | Call routing and providers |

| bin-registrar-manager | SIP registration management |

+-------------------------------------------------------------+

Service Characteristics

Each microservice follows these design principles:

Domain Isolation

Service Boundary:

+----------------------------------------+

| bin-call-manager |

| |

| +----------------------------------+ |

| | Domain Logic (Call Handling) | |

| +----------------------------------+ |

| |

| +----------------------------------+ |

| | Data Access (Call Records) | |

| +----------------------------------+ |

| |

| +----------------------------------+ |

| | RPC Handlers (Message Queue) | |

| +----------------------------------+ |

+----------------------------------------+

Single Responsibility: Each service owns one specific domain

Encapsulated Logic: Business rules contained within the service

Data Ownership: Service owns its database tables and schema

Clear Boundaries: Well-defined interfaces and APIs

Technology Stack

All backend services share a common technology stack:

Language: Go (Golang) 1.21+

HTTP Framework: Gin for REST endpoints (when needed)

Database: MySQL 8.0 via sqlx

Cache: Redis 7.0 via go-redis

Message Queue: RabbitMQ via bin-common-handler

Logging: Structured logging with logrus

Monitoring: Prometheus metrics

Common Structure

All services follow a consistent directory structure:

bin-<service>-manager/
+-- cmd/
|   +-- <service>-manager/
|       +-- main.go              # Entry point
+-- pkg/
|   +-- <domain>handler/         # Business logic
|   +-- dbhandler/               # Database operations
|   +-- cachehandler/            # Redis operations
|   +-- listenhandler/           # RabbitMQ RPC handlers
+-- models/
|   +-- <resource>/              # Data models
+-- go.mod                       # Dependencies

API Gateway - bin-api-manager

The API Gateway serves as the single entry point for all external requests, handling authentication, authorization, and request routing to backend services.

Gateway Responsibilities

API Gateway Layer:

External Clients

(Web, Mobile, Server)

|

| HTTPS

v

+----------------------------------------+

| bin-api-manager |

| |

| 1. +----------------------------+ |

| | Authentication (JWT) | |

| +----------------------------+ |

| |

| 2. +-----------------------------+ |

| | Authorization (Permissions)| |

| +-----------------------------+ |

| |

| 3. +----------------------------+ |

| | Rate Limiting / Throttling| |

| +----------------------------+ |

| |

| 4. +----------------------------+ |

| | Request Routing (RabbitMQ)| |

| +----------------------------+ |

| |

| 5. +----------------------------+ |

| | Response Aggregation | |

| +----------------------------+ |

+----------------------------------------+

|

| RabbitMQ RPC

v

Backend Services

Authentication Flow

JWT Authentication:

Client API Gateway Backend Service

| | |

| POST /auth/login | |

+--------------------------->> |

| {user, pass} | |

| | |

| | Verify credentials |

| | |

| JWT Token | |

<<---------------------------+ |

| | |

| | |

| GET /calls?token=xyz | |

+--------------------------->> |

| | 1. Validate JWT |

| | 2. Extract customer_id |

| | 3. Check permissions |

| | |

| | RPC: GetCalls(ctx) |

| +------------------------->>

| | |

| | [Call List] |

| <<-------------------------+

| | |

| [Call List] | 4. Return response |

<<---------------------------+ |

| | |

Authentication Components:

JWT Validation: Validates token signature and expiration

Customer Extraction: Extracts customer_id from JWT claims

Permission Check: Verifies user has required permissions

Context Propagation: Passes auth context to backend services
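The validation steps above (signature, expiration, customer extraction) can be sketched with the standard library alone. This assumes HS256-signed tokens and a `customer_id` claim; the signing algorithm and key handling used by bin-api-manager are not stated in this document, and a production gateway would normally rely on a maintained JWT library instead.

```go
package main

import (
	"crypto/hmac"
	"crypto/sha256"
	"encoding/base64"
	"encoding/json"
	"errors"
	"fmt"
	"strings"
	"time"
)

// Claims holds the only fields this sketch reads. customer_id is the claim
// named in the text; exp is the standard JWT expiry (Unix seconds).
type Claims struct {
	CustomerID string `json:"customer_id"`
	ExpiresAt  int64  `json:"exp"`
}

var b64 = base64.RawURLEncoding

func sign(secret []byte, signingInput string) string {
	mac := hmac.New(sha256.New, secret)
	mac.Write([]byte(signingInput))
	return b64.EncodeToString(mac.Sum(nil))
}

// IssueToken builds an HS256 token; it exists only so the example can run.
func IssueToken(secret []byte, c Claims) (string, error) {
	payload, err := json.Marshal(c)
	if err != nil {
		return "", err
	}
	input := b64.EncodeToString([]byte(`{"alg":"HS256","typ":"JWT"}`)) +
		"." + b64.EncodeToString(payload)
	return input + "." + sign(secret, input), nil
}

// ValidateToken checks the algorithm, signature, and expiry, then returns
// the claims so the caller can read customer_id.
func ValidateToken(secret []byte, token string, nowUnix int64) (Claims, error) {
	var c Claims
	parts := strings.Split(token, ".")
	if len(parts) != 3 {
		return c, errors.New("malformed token")
	}
	rawHeader, err := b64.DecodeString(parts[0])
	if err != nil {
		return c, errors.New("malformed header")
	}
	var header struct {
		Alg string `json:"alg"`
	}
	// Pin the algorithm so a token cannot choose its own verification method.
	if err := json.Unmarshal(rawHeader, &header); err != nil || header.Alg != "HS256" {
		return c, errors.New("unsupported algorithm")
	}
	want := sign(secret, parts[0]+"."+parts[1])
	if !hmac.Equal([]byte(want), []byte(parts[2])) {
		return c, errors.New("invalid signature")
	}
	rawPayload, err := b64.DecodeString(parts[1])
	if err != nil {
		return c, errors.New("malformed payload")
	}
	if err := json.Unmarshal(rawPayload, &c); err != nil {
		return c, errors.New("malformed claims")
	}
	if nowUnix >= c.ExpiresAt {
		return Claims{}, errors.New("token expired")
	}
	return c, nil
}

func main() {
	secret := []byte("demo-secret") // hypothetical key
	now := time.Now().Unix()
	token, _ := IssueToken(secret, Claims{CustomerID: "cust-42", ExpiresAt: now + 3600})

	claims, err := ValidateToken(secret, token, now)
	fmt.Println(claims.CustomerID, err)
}
```

The signature is verified before the payload is parsed, so unauthenticated input never reaches the claims decoder.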

Authorization Pattern

VoIPBIN implements authorization at the API Gateway, NOT in backend services:

Authorization Check:

+-----------------------------------------------------+

| bin-api-manager (Gateway) |

| |

| 1. Fetch Resource |

| +-------> bin-call-manager.GetCall(call_id) |

| | |

| 2. Check Authorization |

| | if call.customer_id != jwt.customer_id: |

| | return 404 (not 403, for security) |

| | |

| 3. Return Resource |

| +-------> return call |

| |

+-----------------------------------------------------+

+-----------------------------------------------------+

| bin-call-manager (Backend) |

| |

| o NO authentication logic |

| o NO customer_id validation |

| o Just process RPC requests |

| o Return requested data |

| |

+-----------------------------------------------------+

Key Authorization Principles:

Gateway-Only Auth: All authorization logic in bin-api-manager

Fetch-Then-Check: Fetch resource first, then verify ownership

Return 404, Not 403: Return “not found” for unauthorized access, so that callers cannot confirm whether another customer’s resource exists

Backend Trust: Backend services trust the gateway
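The fetch-then-check rule above can be sketched as a single gateway-side function. `CallFetcher` is a hypothetical stand-in for the RPC to bin-call-manager, and the in-memory fetcher exists only to make the example runnable.

```go
package main

import (
	"errors"
	"fmt"
	"net/http"
)

type Call struct {
	ID         string
	CustomerID string
}

var ErrCallNotFound = errors.New("call not found")

// CallFetcher stands in for the RPC to bin-call-manager, which returns the
// call without performing any ownership check of its own.
type CallFetcher func(callID string) (Call, error)

// newFakeFetcher builds an in-memory fetcher for the example.
func newFakeFetcher(calls ...Call) CallFetcher {
	byID := make(map[string]Call, len(calls))
	for _, c := range calls {
		byID[c.ID] = c
	}
	return func(callID string) (Call, error) {
		c, ok := byID[callID]
		if !ok {
			return Call{}, ErrCallNotFound
		}
		return c, nil
	}
}

// GetCallForCustomer fetches first, then checks ownership. A call owned by
// another customer yields the same 404 as a call that does not exist.
func GetCallForCustomer(fetch CallFetcher, jwtCustomerID, callID string) (Call, int) {
	call, err := fetch(callID)
	if errors.Is(err, ErrCallNotFound) {
		return Call{}, http.StatusNotFound
	}
	if err != nil {
		return Call{}, http.StatusInternalServerError
	}
	if call.CustomerID != jwtCustomerID {
		return Call{}, http.StatusNotFound
	}
	return call, http.StatusOK
}

func main() {
	fetch := newFakeFetcher(Call{ID: "call-1", CustomerID: "cust-a"})
	for _, customer := range []string{"cust-a", "cust-b"} {
		_, status := GetCallForCustomer(fetch, customer, "call-1")
		fmt.Println(customer, "->", status)
	}
}
```

Because the two 404 paths are indistinguishable to the client, the response leaks nothing about which call IDs exist.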

Request Routing

The gateway routes requests to appropriate backend services:

Routing Decision:

HTTP Request Gateway Router Backend Service

| | |

| GET /v1.0/calls | |

+--------------------->> |

| | Parse: "calls" |

| | -> bin-call-manager |

| | |

| | RPC Request |

| +----------------------->>

| | |

| | RPC Response |

| <<-----------------------+

| | |

| JSON Response | |

<<---------------------+ |

| | |

Routing Table:

| HTTP Endpoint | Backend Service |
|---|---|
| /v1.0/calls | bin-call-manager |
| /v1.0/conferences | bin-conference-manager |
| /v1.0/messages | bin-message-manager |
| /v1.0/talks | bin-talk-manager |
| /v1.0/emails | bin-email-manager |
| /v1.0/agents | bin-agent-manager |
| /v1.0/queues | bin-queue-manager |
| /v1.0/campaigns | bin-campaign-manager |
| /v1.0/outdials | bin-outdial-manager |
| /v1.0/flows | bin-flow-manager |
| /v1.0/conversations | bin-conversation-manager |
| /v1.0/billings | bin-billing-manager |
| /v1.0/customers | bin-customer-manager |
| /v1.0/webhooks | bin-webhook-manager |
| /v1.0/transcribes | bin-transcribe-manager |
| /v1.0/numbers | bin-number-manager |
| /v1.0/routes | bin-route-manager |
| /v1.0/tags | bin-tag-manager |
| /v1.0/storage | bin-storage-manager |
| /v1.0/transfers | bin-transfer-manager |
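The routing decision above amounts to a lookup keyed on the first path segment after the version prefix. The sketch below encodes the table as a Go map; the `ResolveService` function and its parsing rules are illustrative assumptions, not the gateway's actual router.

```go
package main

import (
	"fmt"
	"strings"
)

// routes mirrors the routing table: resource segment -> backend service.
var routes = map[string]string{
	"calls":         "bin-call-manager",
	"conferences":   "bin-conference-manager",
	"messages":      "bin-message-manager",
	"talks":         "bin-talk-manager",
	"emails":        "bin-email-manager",
	"agents":        "bin-agent-manager",
	"queues":        "bin-queue-manager",
	"campaigns":     "bin-campaign-manager",
	"outdials":      "bin-outdial-manager",
	"flows":         "bin-flow-manager",
	"conversations": "bin-conversation-manager",
	"billings":      "bin-billing-manager",
	"customers":     "bin-customer-manager",
	"webhooks":      "bin-webhook-manager",
	"transcribes":   "bin-transcribe-manager",
	"numbers":       "bin-number-manager",
	"routes":        "bin-route-manager",
	"tags":          "bin-tag-manager",
	"storage":       "bin-storage-manager",
	"transfers":     "bin-transfer-manager",
}

// ResolveService maps a request path such as /v1.0/calls/<id> to the backend
// service that owns the resource. It reports false for unknown paths.
func ResolveService(path string) (string, bool) {
	if i := strings.IndexByte(path, '?'); i >= 0 {
		path = path[:i] // ignore any query string
	}
	parts := strings.Split(strings.Trim(path, "/"), "/")
	if len(parts) < 2 || parts[0] != "v1.0" {
		return "", false
	}
	svc, ok := routes[parts[1]]
	return svc, ok
}

func main() {
	for _, p := range []string{"/v1.0/calls", "/v1.0/flows/abc?page=2", "/v1.0/unknown"} {
		svc, ok := ResolveService(p)
		fmt.Printf("%-26s -> %q (found=%v)\n", p, svc, ok)
	}
}
```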

Special Service Architectures

Some services have unique architectures that differ from the standard microservice pattern:

bin-pipecat-manager (Hybrid Go/Python)

This service combines Go and Python for AI-powered voice conversations:

Hybrid Architecture:

+------------------------------------------------------------+

| bin-pipecat-manager |

| |

| Go Service (Port 8080) Python Service (Port 8000)|

| +---------------------+ +---------------------+ |

| | o RabbitMQ RPC | HTTP | o FastAPI server | |

| | o WebSocket server |<------>| o Pipecat pipelines | |

| | o Session lifecycle | | o STT/LLM/TTS | |

| | o Audiosocket (RTP) | | o Tool execution | |

| +----------+----------+ +---------------------+ |

| | |

+--------------|---------------------------------------------+

|

| Audiosocket (8kHz PCM)

v

Asterisk PBX

Audio Flow:

Asterisk (8kHz) --audiosocket--> Go --websocket/protobuf--> Python

<-----------------------

STT -> LLM -> TTS pipeline executed in Python/Pipecat

Key Features:

Dual Runtime: Go for infrastructure, Python for AI pipelines

Protobuf Frames: Efficient audio frame serialization

Sample Rate Conversion: 8kHz (Asterisk) ↔ 16kHz (AI services)

Tool Calling: LLM can invoke VoIP functions (connect_call, send_email)
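The 8kHz to 16kHz conversion mentioned above can be illustrated with a naive resampler over 16-bit PCM samples. This sketch uses linear interpolation for upsampling and pair averaging for downsampling, which is an assumption made for brevity; the service's actual resampling method is not described here, and production audio code would normally apply a proper low-pass filter.

```go
package main

import "fmt"

// Upsample8kTo16k doubles the sample rate by inserting the midpoint between
// each pair of neighbouring samples. The last sample is repeated.
func Upsample8kTo16k(in []int16) []int16 {
	out := make([]int16, 0, len(in)*2)
	for i, s := range in {
		next := s
		if i+1 < len(in) {
			next = in[i+1]
		}
		mid := int16((int32(s) + int32(next)) / 2) // widen to avoid overflow
		out = append(out, s, mid)
	}
	return out
}

// Downsample16kTo8k halves the sample rate by averaging each pair of
// samples. A trailing unpaired sample is dropped.
func Downsample16kTo8k(in []int16) []int16 {
	out := make([]int16, 0, len(in)/2)
	for i := 0; i+1 < len(in); i += 2 {
		out = append(out, int16((int32(in[i])+int32(in[i+1]))/2))
	}
	return out
}

func main() {
	narrow := []int16{0, 100, 200}
	wide := Upsample8kTo16k(narrow)
	fmt.Println("upsampled:  ", wide)
	fmt.Println("downsampled:", Downsample16kTo8k(wide))
}
```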

bin-sentinel-manager (Kubernetes Monitoring)

This service monitors pod lifecycle events in Kubernetes:

Kubernetes Monitoring:

+-----------------------------------------------------------+

| Kubernetes Cluster (voip namespace) |

| |

| +------------+ +------------+ +------------+ |

| | asterisk- | | asterisk- | | asterisk- | |

| | call | | conference | | registrar | |

| +------+-----+ +------+-----+ +------+-----+ |

| | | | |

| +---------------+---------------+ |

| | |

| Pod Events (Update/Delete) |

| | |

| v |

| +-------------------------------+ |

| | bin-sentinel-manager | |

| | | |

| | o Pod informers (client-go) | |

| | o Label selector filtering | |

| | o Event publishing | |

| +---------------+---------------+ |

| | |

+-------------------------|---------------------------------+

|

| RabbitMQ Events

v

+-------------------+

| bin-call-manager |

| (SIP Recovery) |

+-------------------+

Key Features:

In-Cluster Monitoring: Uses Kubernetes client-go with RBAC

Label-Based Filtering: Watches specific pod labels (app=asterisk-*)

Event Publishing: Notifies services via RabbitMQ for recovery actions

Prometheus Metrics: Exports pod state change counters

SIP Session Recovery: Enables call-manager to recover sessions when pods crash

bin-hook-manager (Webhook Gateway)

This service receives external webhooks and routes them internally:

External Webhook Flow:

External Provider VoIPBIN Internal

(Telnyx, MessageBird) Services

| |

| HTTPS POST |

| /v1.0/hooks/messages |

v |

+-----------------+ |

| bin-hook-manager| |

| | RabbitMQ |

| o Validate +------------------------>| bin-message-manager

| o Parse | | bin-email-manager

| o Route | | bin-conversation-manager

+-----------------+ |

Key Features:

Public Endpoint: Receives webhooks from external providers

Message Routing: Forwards to internal services via RabbitMQ

Provider Support: Handles Telnyx, MessageBird delivery notifications

Thin Proxy: No business logic, just routing

Service Independence

VoIPBIN’s microservices architecture enables true service independence:

Independent Deployment

Service Deployment:

+--------------+ +--------------+ +--------------+

| Service A | | Service B | | Service C |

| v1.2.3 | | v2.0.1 | | v1.5.0 |

+------+-------+ +------+-------+ +------+-------+

| | |

| | Deploy v2.1.0 |

| | (no impact) |

| v |

| +--------------+ |

| | Service B | |

| | v2.1.0 | |

| +--------------+ |

| | |

+-----------------+-----------------+

Message Queue

No Downtime: Services update without affecting others

Version Independence: Each service has its own version

Gradual Rollout: Can deploy to a subset of instances

Quick Rollback: Easy to revert problematic deployments

Independent Scaling

Horizontal Scaling:

Normal Load: High Call Load:

+----------+ +----------+ +----------+ +----------+

| Call | | Call | | Call | | Call |

| Manager | | Manager | | Manager | | Manager |

| x1 | | x1 | | x2 | | x3 |

+----------+ +----------+ +----------+ +----------+

+----------+ +----------+

| SMS | | SMS |

| Manager | | Manager |

| x1 | | x1 |

+----------+ +----------+

Scale only what needs scaling

Targeted Scaling: Scale only services experiencing load

Cost Optimization: Don’t over-provision underutilized services

Auto-Scaling: Kubernetes HPA scales based on metrics

Resource Efficiency: Better resource utilization

Independent Development

Development Isolation:

Team A Team B Team C

| | |

| bin-call- | bin-flow- | bin-ai-

| manager | manager | manager

| | |

| o Go codebase | o Go codebase | o Go codebase

| o Own git | o Own git | o Own git

| branch | branch | branch

| o Own CI/CD | o Own CI/CD | o Own CI/CD

| o Own tests | o Own tests | o Own tests

| | |

+-------------------+-------------------+

Coordinate only via:

o Message contracts

o Database schema

o API contracts

Team Autonomy: Teams work independently

Faster Development: No coordination bottleneck

Technology Flexibility: Can use different libraries

Clear Ownership: Each team owns specific domains

Service Communication Patterns

Services communicate primarily through RabbitMQ RPC:

Synchronous RPC (Request-Response)

RPC Communication:

API Gateway RabbitMQ Call Manager

| | |

| 1. Call Request | |

+------------------------>> |

| Queue: bin-manager. | |

| call.request | |

| | 2. Dequeue Request |

| +--------------------->>

| | |

| | 3. Process Request |

| | (create call) |

| | |

| | 4. Send Response |

| <<---------------------+

| 5. Response | |

<<------------------------+ |

| | |

Asynchronous Events (Pub/Sub)

Event Broadcasting:

Call Manager RabbitMQ Exchange Subscribers

| | |

| 1. Call Created | |

| (publish event) | |

+--------------------->> |

| | |

| | 2. Broadcast |

| | to all |

| +----------+------------+

| | | |

| | v v

| | +----------+ +----------+

| | | Billing | | Webhook |

| | | Manager | | Manager |

| | +----------+ +----------+

| | |

| | Process event |

| | independently |

Communication Patterns Used:

RPC (Synchronous): For request-response operations (GET, POST, DELETE)

Pub/Sub (Asynchronous): For event notifications (call.created, sms.sent)

Webhooks: For external system notifications

WebSocket: For real-time client updates
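The synchronous RPC pattern above, a request placed on a named queue and a reply matched back to the caller, can be sketched with Go channels in place of RabbitMQ. The `Request` and `Response` types, the correlation ID field, and the per-call reply channel are assumptions modelled on the usual reply-to convention; the real wire format in bin-common-handler is not shown in this document.

```go
package main

import (
	"errors"
	"fmt"
	"time"
)

// Request carries a correlation ID and a private reply channel, mirroring
// the reply-to / correlation-id convention used for RPC over a broker.
type Request struct {
	CorrelationID string
	Body          string
	ReplyTo       chan<- Response
}

type Response struct {
	CorrelationID string
	Body          string
}

// serve consumes requests from the queue until it is closed.
func serve(queue <-chan Request, handle func(string) string) {
	for req := range queue {
		req.ReplyTo <- Response{CorrelationID: req.CorrelationID, Body: handle(req.Body)}
	}
}

// call enqueues a request and waits for the matching reply or a timeout.
func call(queue chan<- Request, id, body string, timeoutMs int) (string, error) {
	reply := make(chan Response, 1) // buffered so a late reply never blocks the server
	queue <- Request{CorrelationID: id, Body: body, ReplyTo: reply}
	select {
	case resp := <-reply:
		if resp.CorrelationID != id {
			return "", errors.New("correlation id mismatch")
		}
		return resp.Body, nil
	case <-time.After(time.Duration(timeoutMs) * time.Millisecond):
		return "", errors.New("rpc timeout")
	}
}

func main() {
	queue := make(chan Request, 8) // stands in for bin-manager.call.request
	go serve(queue, func(body string) string { return "created: " + body })

	resp, err := call(queue, "req-1", "call to +15551230000", 1000)
	fmt.Println(resp, err)
}
```

Starting several `serve` goroutines on the same channel gives the competing-consumer behaviour that lets multiple service instances share one queue.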

Service Discovery and Configuration

VoIPBIN uses a hybrid approach for service discovery:

Queue-Based Discovery

Service Registration:

+------------------------------------------------+

| RabbitMQ Queue Naming |

| |

| bin-manager.<service>.<operation> |

| |

| Examples: |

| o bin-manager.call.request |

| o bin-manager.conference.request |

| o bin-manager.sms.request |

| |

| Services listen on their named queues |

| Clients send to known queue names |

+------------------------------------------------+

Convention-Based: Queue names follow a predictable pattern

No Registry: No central service registry needed

Self-Registering: Services create queues on startup

Load Balanced: Multiple instances share the same queue
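Because discovery is purely a naming convention, a client only needs a function that builds the queue name. The helpers below are a hypothetical sketch of the `bin-manager.<service>.<operation>` pattern shown above; they are not taken from bin-common-handler.

```go
package main

import (
	"fmt"
	"strings"
)

const queuePrefix = "bin-manager"

// QueueName builds a queue name following bin-manager.<service>.<operation>.
func QueueName(service, operation string) string {
	return strings.Join([]string{queuePrefix, service, operation}, ".")
}

// ParseQueueName splits a queue name back into its service and operation.
// It reports false if the name does not follow the convention.
func ParseQueueName(name string) (service, operation string, ok bool) {
	parts := strings.Split(name, ".")
	if len(parts) != 3 || parts[0] != queuePrefix || parts[1] == "" || parts[2] == "" {
		return "", "", false
	}
	return parts[1], parts[2], true
}

func main() {
	name := QueueName("call", "request")
	fmt.Println(name)
	fmt.Println(ParseQueueName(name))
	fmt.Println(ParseQueueName("something.else"))
}
```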

Configuration Management

Services receive configuration through multiple sources:

Configuration Sources:

+----------------+

| Service |

+----+-----------+

|

+-------> Environment Variables

| o Database connection

| o RabbitMQ address

| o Redis address

|

+-------> Command-Line Flags

| o Port number

| o Log level

|

+-------> bin-config-manager

| o Feature flags

| o Business logic config

|

+-------> Database

o Dynamic configuration

o Customer-specific settings
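The first two configuration sources above, environment variables and command-line flags, can be combined in one loader. In this sketch the variable names (`DATABASE_DSN`, `RABBITMQ_ADDRESS`, `REDIS_ADDRESS`) and flag names are hypothetical; the real services may use different names and defaults.

```go
package main

import (
	"errors"
	"flag"
	"fmt"
)

// Config groups the settings listed in the diagram above.
type Config struct {
	DatabaseDSN  string
	RabbitMQAddr string
	RedisAddr    string
	Port         int
	LogLevel     string
}

// LoadConfig reads flags from args and connection settings from getenv.
// Both are injected so the loader can be tested without touching the
// process environment.
func LoadConfig(args []string, getenv func(string) string) (Config, error) {
	var cfg Config
	fs := flag.NewFlagSet("service", flag.ContinueOnError)
	fs.IntVar(&cfg.Port, "port", 8080, "listen port")
	fs.StringVar(&cfg.LogLevel, "log-level", "info", "log verbosity")
	if err := fs.Parse(args); err != nil {
		return Config{}, err
	}

	cfg.DatabaseDSN = getenv("DATABASE_DSN")
	cfg.RabbitMQAddr = getenv("RABBITMQ_ADDRESS")
	cfg.RedisAddr = getenv("REDIS_ADDRESS")
	if cfg.DatabaseDSN == "" || cfg.RabbitMQAddr == "" || cfg.RedisAddr == "" {
		return Config{}, errors.New("DATABASE_DSN, RABBITMQ_ADDRESS and REDIS_ADDRESS must be set")
	}
	return cfg, nil
}

func main() {
	// A fake environment keeps the demo self-contained. A real service would
	// call LoadConfig(os.Args[1:], os.Getenv).
	env := map[string]string{
		"DATABASE_DSN":     "user:pass@tcp(mysql:3306)/voipbin",
		"RABBITMQ_ADDRESS": "amqp://rabbitmq:5672",
		"REDIS_ADDRESS":    "redis:6379",
	}
	cfg, err := LoadConfig([]string{"-port", "9090"}, func(k string) string { return env[k] })
	fmt.Printf("%+v %v\n", cfg, err)
}
```

Failing fast on missing connection settings surfaces misconfiguration at startup instead of at the first request.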

Health Monitoring

All services expose health check endpoints:

Health Check Architecture:

Kubernetes Service Health Dependencies

| | |

| 1. Health Check | |

+---------------------->> |

| GET /health | |

| | 2. Check MySQL |

| +---------------------->>

| | (ping) |

| | |

| | 3. Check Redis |

| +---------------------->>

| | (ping) |

| | |

| | 4. Check RabbitMQ |

| +---------------------->>

| | (connection) |

| | |

| 200 OK / 503 Error | |

<<----------------------+ |

| | |

| 5. Restart if failed | |

| (after retries) | |

Health Check Components:

Liveness Probe: Is the service running?

Readiness Probe: Is the service ready to accept traffic?

Dependency Checks: Are database, cache, queue healthy?

Auto-Recovery: Kubernetes restarts unhealthy pods
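The health check flow above can be sketched as an HTTP handler that runs each dependency check and returns 200 or 503. The `Check` type and `probe` helper are hypothetical, and the checks are plain functions here where a real service would ping MySQL, Redis, and RabbitMQ. Whether one endpoint backs both the liveness and readiness probes is not stated in this document.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
	"net/http"
	"net/http/httptest"
)

// Check reports whether one dependency is reachable.
type Check func() error

var errDown = errors.New("dependency down")

// HealthHandler runs every check and answers 200 only if all succeed.
// The JSON body lists the per-dependency result.
func HealthHandler(checks map[string]Check) http.HandlerFunc {
	return func(w http.ResponseWriter, r *http.Request) {
		status := http.StatusOK
		report := make(map[string]string, len(checks))
		for name, check := range checks {
			if err := check(); err != nil {
				status = http.StatusServiceUnavailable
				report[name] = err.Error()
				continue
			}
			report[name] = "ok"
		}
		w.Header().Set("Content-Type", "application/json")
		w.WriteHeader(status)
		_ = json.NewEncoder(w).Encode(report)
	}
}

// probe calls the handler in-process and returns the HTTP status code.
func probe(checks map[string]Check) int {
	rec := httptest.NewRecorder()
	req := httptest.NewRequest(http.MethodGet, "/health", nil)
	HealthHandler(checks).ServeHTTP(rec, req)
	return rec.Code
}

func main() {
	ok := func() error { return nil }
	down := func() error { return errDown }

	fmt.Println(probe(map[string]Check{"mysql": ok, "redis": ok, "rabbitmq": ok}))
	fmt.Println(probe(map[string]Check{"mysql": ok, "redis": down, "rabbitmq": ok}))
}
```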

Error Handling and Resilience

Services implement multiple resilience patterns:

Circuit Breaker

Circuit Breaker States:

Closed (Normal) Open (Failed) Half-Open (Testing)

| | |

| Requests pass | Requests rejected | Limited requests

| through | immediately | allowed

| | |

| ------------> | --------X | ------------>

| | |

| If failures | After timeout | If success

| exceed threshold | period | threshold met

| | |

+---------------------->> |

<<----------------------+

| |

+---------------------->>

If still failing |

|

+------> Closed

Prevent Cascade Failures: Stop calling failed services

Fast Fail: Return an error immediately when the circuit is open

Auto-Recovery: Periodically test whether the service has recovered
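The three states above can be sketched as a small state machine. This is an illustrative breaker, not the one used in VoIPBIN: the threshold and cooldown values are hypothetical, a single success in the half-open state closes the circuit (the text mentions a success threshold, which is simplified away here), and the clock is injected so the example runs without sleeping.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

type State int

const (
	Closed State = iota
	Open
	HalfOpen
)

var (
	ErrOpen    = errors.New("circuit open")
	errBackend = errors.New("backend failure") // used by the demo only
)

// Breaker trips to Open after `threshold` consecutive failures and allows a
// trial request once `cooldownMs` has elapsed.
type Breaker struct {
	mu         sync.Mutex
	state      State
	failures   int
	threshold  int
	cooldownMs int64
	openedAt   int64
	nowMs      func() int64
}

func NewBreaker(threshold int, cooldownMs int64, nowMs func() int64) *Breaker {
	return &Breaker{threshold: threshold, cooldownMs: cooldownMs, nowMs: nowMs}
}

// Current returns the breaker's state.
func (b *Breaker) Current() State {
	b.mu.Lock()
	defer b.mu.Unlock()
	return b.state
}

// Call runs fn unless the circuit is open and still cooling down.
func (b *Breaker) Call(fn func() error) error {
	b.mu.Lock()
	if b.state == Open {
		if b.nowMs()-b.openedAt < b.cooldownMs {
			b.mu.Unlock()
			return ErrOpen // fast fail: fn is never invoked
		}
		b.state = HalfOpen // cooldown elapsed: let a trial request through
	}
	b.mu.Unlock()

	err := fn()

	b.mu.Lock()
	defer b.mu.Unlock()
	if err == nil {
		b.state, b.failures = Closed, 0
		return nil
	}
	b.failures++
	if b.state == HalfOpen || b.failures >= b.threshold {
		b.state, b.openedAt = Open, b.nowMs()
	}
	return err
}

func main() {
	clock := int64(0)
	b := NewBreaker(2, 100, func() int64 { return clock })
	fail := func() error { return errBackend }

	fmt.Println(b.Call(fail), b.Call(fail)) // two failures trip the breaker
	fmt.Println(b.Call(fail))               // rejected without calling fail
	clock = 150                             // cooldown has elapsed
	fmt.Println(b.Call(func() error { return nil }), b.Current() == Closed)
}
```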

Retry with Backoff

Exponential Backoff:

Attempt 1: Immediate

|

| Failed

v

Attempt 2: Wait 1s

|

| Failed

v

Attempt 3: Wait 2s

|

| Failed

v

Attempt 4: Wait 4s

|

| Failed

v

Attempt 5: Wait 8s

|

| Failed

v

Give up, return error

Transient Failures: Retry on temporary failures

Backoff Strategy: Increase wait time between retries

Max Attempts: Limit total number of retries

Idempotency: Ensure operations safe to retry
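
The schedule in the diagram (immediate, then 1s, 2s, 4s, 8s) can be sketched as below. The function names are hypothetical, and treating `ConnectionError` as the transient failure type is an assumption made for illustration.

```python
import time
from typing import Callable, List, TypeVar

T = TypeVar("T")


def backoff_delays(max_attempts: int = 5, base: float = 1.0) -> List[float]:
    """Wait before each attempt: no wait first, then base * 1, 2, 4, 8, ..."""
    return [0.0 if i == 0 else base * 2 ** (i - 1) for i in range(max_attempts)]


def retry(operation: Callable[[], T], max_attempts: int = 5, base: float = 1.0,
          sleep: Callable[[float], None] = time.sleep) -> T:
    last_error: Exception = RuntimeError("max_attempts must be at least 1")
    for delay in backoff_delays(max_attempts, base):
        if delay:
            sleep(delay)
        try:
            return operation()
        except ConnectionError as exc:  # assumed transient; other errors propagate
            last_error = exc
    raise last_error  # give up after the final attempt


attempts = {"count": 0}
slept: List[float] = []


def flaky() -> str:
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("temporary failure")
    return "done"


result = retry(flaky, sleep=slept.append)  # record waits instead of sleeping
```

Because a retried operation may already have taken effect on the first attempt, this pattern is only safe when the operation is idempotent.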

Timeouts

All request-response RPC calls have strict timeouts; only streaming operations are exempt:

Default Timeout: 30 seconds for most operations

Long Operations: 120 seconds for complex workflows

Streaming: No timeout for streaming operations

Context Propagation: Timeout passed through call chain
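
Passing a timeout through a call chain usually means passing an absolute deadline, so each downstream call gets only the time that is left. A minimal sketch, assuming a hypothetical `Deadline` helper (the platform's own services would more likely use their language's context mechanism):

```python
import time
from typing import Callable


class Deadline:
    """Absolute deadline shared by every hop of one request."""

    def __init__(self, timeout: float, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._expires_at = clock() + timeout

    def remaining(self) -> float:
        return max(0.0, self._expires_at - self._clock())

    def child_timeout(self, requested: float) -> float:
        """Timeout for a downstream call: never longer than what is left."""
        left = self.remaining()
        if left == 0.0:
            raise TimeoutError("deadline exceeded before downstream call")
        return min(requested, left)


now = [100.0]  # fake clock
deadline = Deadline(30.0, clock=lambda: now[0])  # default 30-second budget
now[0] = 125.0  # 25 seconds were spent upstream
downstream_timeout = deadline.child_timeout(30.0)
```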

Deployment Architecture

Services deploy to Kubernetes on Google Cloud Platform:

Kubernetes Deployment:

+---------------------------------------------------------+

| GKE Cluster |

| |

| +---------------------------------------------------+ |

| | Namespace: production | |

| | | |

| | +---------------------------------------------+ | |

| | | Deployment: bin-call-manager | | |

| | | +---------+ +---------+ +---------+ | | |

| | | | Pod 1 | | Pod 2 | | Pod 3 | | | |

| | | +---------+ +---------+ +---------+ | | |

| | | Replicas: 3 HPA: 3-10 | | |

| | +---------------------------------------------+ | |

| | | |

| | +---------------------------------------------+ | |

| | | Deployment: bin-api-manager | | |

| | | +---------+ +---------+ +---------+ | | |

| | | | Pod 1 | | Pod 2 | | Pod 3 | | | |

| | | +---------+ +---------+ +---------+ | | |

| | | Replicas: 3 HPA: 3-20 | | |

| | +---------------------------------------------+ | |

| | | |

| | ... 30+ more deployments | |

| | | |

| +---------------------------------------------------+ |

| |

| +---------------------------------------------------+ |

| | Shared Resources (same cluster) | |

| | o MySQL StatefulSet | |

| | o Redis StatefulSet | |

| | o RabbitMQ StatefulSet | |

| | o Prometheus Monitoring | |

| +---------------------------------------------------+ |

+---------------------------------------------------------+

Deployment Characteristics:

Container-Based: Each service runs in Docker containers

Replica Sets: Multiple instances for high availability

Auto-Scaling: HPA (Horizontal Pod Autoscaler) based on CPU/memory

Rolling Updates: Zero-downtime deployments

Resource Limits: CPU and memory limits per container

Health Probes: Automatic restart of failed containers

Monitoring and Observability

Comprehensive monitoring across all services:

Metrics Collection

Metrics Pipeline:

Services Prometheus Grafana

(30+ services) | |

| | |

| Expose /metrics | |

| endpoint | |

| | |

| Scrape every 15s | |

+--------------------->> |

| | |

| | Time-series DB |

| | stores metrics |

| | |

| | Query metrics |

| +--------------------->>

| | |

| | Visualize |

| | dashboards |

| | |

Key Metrics:

Request Rate: Requests per second per service

Error Rate: Failed requests percentage

Latency: P50, P95, P99 response times

Resource Usage: CPU, memory, disk per pod

Queue Depth: RabbitMQ queue backlogs

Database Connections: Active connections per service

Logging

All services use structured logging:

{

"timestamp": "2026-01-20T12:00:00.000Z",

"level": "info",

"service": "bin-call-manager",

"instance": "pod-xyz",

"message": "Call created successfully",

"call_id": "abc-123-def",

"customer_id": "customer-789",

"duration_ms": 45

}

Structured Format: JSON logs for easy parsing

Centralized Collection: All logs aggregated in one place

Searchable: Full-text search across all services

Correlation IDs: Track requests across services
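
A log line in this shape can be produced with a small JSON formatter. The sketch below uses Python's standard `logging` module; `JsonFormatter` and the `fields` convention for extra attributes are assumptions made for illustration, not the platform's logging library.

```python
import io
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    def __init__(self, service: str, instance: str) -> None:
        super().__init__()
        self.service = service
        self.instance = instance

    def format(self, record: logging.LogRecord) -> str:
        created = datetime.fromtimestamp(record.created, tz=timezone.utc)
        entry = {
            "timestamp": created.isoformat(timespec="milliseconds"),
            "level": record.levelname.lower(),
            "service": self.service,
            "instance": self.instance,
            "message": record.getMessage(),
        }
        # Fields passed via extra={"fields": {...}} are merged into the entry.
        entry.update(getattr(record, "fields", {}))
        return json.dumps(entry)


stream = io.StringIO()  # stands in for stdout or a log shipper
handler = logging.StreamHandler(stream)
handler.setFormatter(JsonFormatter("bin-call-manager", "pod-xyz"))
logger = logging.getLogger("structured-example")
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False

logger.info("Call created successfully",
            extra={"fields": {"call_id": "abc-123-def", "duration_ms": 45}})
entry = json.loads(stream.getvalue())
```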

Best Practices

VoIPBIN’s backend follows these best practices:

Service Design:

One service, one responsibility

Services communicate via messages, not direct calls

Shared database, but logical isolation by tables

Idempotent operations for safe retries

Error Handling:

Always return errors, never panic

Use context for timeouts and cancellation

Implement circuit breakers for external dependencies

Log errors with full context

Performance:

Use connection pooling for database and Redis

Implement caching for frequently accessed data

Use batch operations where possible

Monitor and optimize hot paths

Security:

No authentication logic in backend services

Trust the API gateway for auth decisions

Validate all inputs at service boundaries

Use parameterized queries to prevent SQL injection

Testing:

Unit tests for business logic

Integration tests with mock dependencies

End-to-end tests for critical flows

Load tests before production deployment

Inter-Service Communication

Note

AI Context

This page describes VoIPBIN’s inter-service communication patterns: RabbitMQ RPC (synchronous request-response), RabbitMQ pub/sub (asynchronous events), ZeroMQ (high-performance real-time streaming), and WebSocket (client notifications). Relevant when an AI agent needs to understand how services talk to each other, message reliability guarantees, queue naming conventions, or event types.

VoIPBIN’s microservices communicate through multiple messaging patterns optimized for different use cases. The architecture uses RabbitMQ for RPC and pub/sub, ZeroMQ for high-performance events, and WebSocket for real-time client communication.

Communication Patterns Overview

VoIPBIN uses three primary communication mechanisms:

Communication Architecture:

+---------------------------------------------------------+

| RabbitMQ (Primary Bus) |

| |

| +-----------------------+ +-----------------------+ |

| | RPC (Synchronous) | | Pub/Sub (Async) | |

| | Request-Response | | Event Broadcasting | |

| +-----------------------+ +-----------------------+ |

+---------------------------------------------------------+

+---------------------------------------------------------+

| ZeroMQ (High-Performance Events) |

| |

| o Real-time event streaming |

| o Agent presence updates |

| o Call state changes |

+---------------------------------------------------------+

+---------------------------------------------------------+

| WebSocket (Client Communication) |

| |

| o Real-time client notifications |

| o Bi-directional media streaming |

| o Live transcription feeds |

+---------------------------------------------------------+

RabbitMQ RPC Pattern

VoIPBIN uses RabbitMQ for synchronous request-response communication between services.

RPC Flow

RPC Request-Response Pattern:

Client Service RabbitMQ Server Service

| | |

| 1. Send Request | |

| +------------+ | |

| | call_id | | |

| | action | | |

| | reply_to | | |

| +------------+ | |

+-------------------->> |

| Queue: bin-manager.| |

| call.request| |

| | 2. Dequeue |

| +---------------------->>

| | |

| | 3. Process Request |

| | (business logic) |

| | |

| | 4. Send Response |

| <<----------------------+

| | Queue: reply_to |

| 5. Receive Response| |

<<--------------------+ |

| +------------+ | |

| | status | | |

| | data | | |

| | error | | |

| +------------+ | |

| | |

Queue Naming Convention

All RPC queues follow a consistent naming pattern:

Queue Name Format:

bin-manager.<service>.<operation>

Examples:

o bin-manager.call.request -> bin-call-manager

o bin-manager.conference.request -> bin-conference-manager

o bin-manager.sms.request -> bin-sms-manager

o bin-manager.flow.request -> bin-flow-manager

o bin-manager.billing.request -> bin-billing-manager

Message Structure

RPC messages use a standardized JSON format:

Request Message:

{

"message_id": "uuid-v4",

"timestamp": "2026-01-20T12:00:00.000Z",

"route": "/v1/calls",

"method": "POST",

"headers": {

"customer_id": "customer-123",

"agent_id": "agent-456"

},

"body": {

"source": {"type": "tel", "target": "+15551234567"},

"destinations": [{"type": "tel", "target": "+15559876543"}]

}

}

Response Message:

{

"message_id": "uuid-v4",

"timestamp": "2026-01-20T12:00:01.000Z",

"status_code": 200,

"body": {

"id": "call-789",

"status": "ringing",

...

},

"error": null

}
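
The queue naming convention and message envelopes above can be sketched as plain helper functions. The helper names are hypothetical, and echoing the request's `message_id` in the response is an assumption: the format above does not say how replies are correlated.

```python
import json
import uuid
from datetime import datetime, timezone
from typing import Any, Dict, Optional


def queue_name(service: str, operation: str = "request") -> str:
    """bin-manager.<service>.<operation>, e.g. bin-manager.call.request."""
    return f"bin-manager.{service}.{operation}"


def _now() -> str:
    return datetime.now(timezone.utc).isoformat(timespec="milliseconds")


def build_request(method: str, route: str, headers: Dict[str, str],
                  body: Dict[str, Any]) -> Dict[str, Any]:
    return {"message_id": str(uuid.uuid4()), "timestamp": _now(),
            "route": route, "method": method, "headers": headers, "body": body}


def build_response(request: Dict[str, Any], status_code: int,
                   body: Optional[Dict[str, Any]] = None,
                   error: Optional[str] = None) -> Dict[str, Any]:
    # Assumption: the reply reuses the request's message_id for correlation.
    return {"message_id": request["message_id"], "timestamp": _now(),
            "status_code": status_code, "body": body, "error": error}


request = build_request(
    "POST", "/v1/calls",
    {"customer_id": "customer-123", "agent_id": "agent-456"},
    {"source": {"type": "tel", "target": "+15551234567"},
     "destinations": [{"type": "tel", "target": "+15559876543"}]},
)
wire = json.dumps(request)  # payload that would be published to queue_name("call")
response = build_response(request, 200, body={"id": "call-789", "status": "ringing"})
```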

RPC Implementation Pattern

Services implement RPC handlers following this pattern:

Service RPC Handler:

+------------------------------------------------+

| bin-call-manager |

| |

| 1. Listen on Queue |

| +- bin-manager.call.request |

| | |

| 2. Receive Message |

| +- Deserialize JSON |

| +- Validate request |

| | |

| 3. Route to Handler |

| +- Parse route: POST /v1/calls |

| +- Call: CallCreate(ctx, req) |

| | |

| 4. Execute Business Logic |

| +- Validate data |

| +- Create call record |

| +- Initiate SIP call |

| | |

| 5. Send Response |

| +- Serialize result |

| +- Reply to reply_to queue |

| |

+------------------------------------------------+
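
Steps 2 to 5 of this handler pattern can be sketched as a dispatch table keyed by method and route. Everything here is illustrative: `call_create` is a placeholder for the real business logic, and the 400/404 status codes for malformed or unroutable requests are assumptions.

```python
import json
from typing import Any, Callable, Dict, Optional, Tuple

Handler = Callable[[Dict[str, Any], Dict[str, Any]], Dict[str, Any]]


def call_create(headers: Dict[str, Any], body: Dict[str, Any]) -> Dict[str, Any]:
    # Placeholder business logic: validate, then pretend a call was created.
    if not body.get("destinations"):
        raise ValueError("destinations is required")
    return {"id": "call-789", "status": "ringing"}


ROUTES: Dict[Tuple[str, str], Handler] = {("POST", "/v1/calls"): call_create}


def _reply(status_code: int, body: Optional[Dict[str, Any]] = None,
           error: Optional[str] = None) -> bytes:
    return json.dumps({"status_code": status_code, "body": body, "error": error}).encode()


def handle_message(raw: bytes) -> bytes:
    """Deserialize, route, execute, and serialize the reply for one RPC message."""
    try:
        request = json.loads(raw)
        key = (request["method"], request["route"])
    except (ValueError, KeyError, TypeError):
        return _reply(400, error="malformed request")
    handler = ROUTES.get(key)
    if handler is None:
        return _reply(404, error="unknown route")
    try:
        body = handler(request.get("headers", {}), request.get("body", {}))
    except ValueError as exc:  # validation failure inside the handler
        return _reply(400, error=str(exc))
    return _reply(200, body=body)


ok = json.loads(handle_message(json.dumps({
    "method": "POST", "route": "/v1/calls",
    "body": {"destinations": [{"type": "tel", "target": "+15559876543"}]},
}).encode()))
```

The bytes returned by `handle_message` are what the service would publish to the `reply_to` queue.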

Load Balancing

Multiple service instances share the same queue:

Load Balanced RPC:

API Gateway Queue Service Instances

| | |

| Request 1 | |

+--------------------->> |

| +---------------------->> Instance 1

| | (round-robin) | (processes req 1)

| | |

| Request 2 | |

+--------------------->> |

| +---------------------->> Instance 2

| | (round-robin) | (processes req 2)

| | |

| Request 3 | |

+--------------------->> |

| +---------------------->> Instance 3

| | (round-robin) | (processes req 3)

| | |

Fair Distribution: RabbitMQ dispatches messages round-robin across the consumers of a queue

No Coordination: Instances don’t need to know about each other

Dynamic Scaling: Add/remove instances without configuration

Automatic Recovery: If instance fails, messages redelivered

RabbitMQ Pub/Sub Pattern

For asynchronous event notifications, VoIPBIN uses RabbitMQ’s pub/sub (fanout exchange) pattern.

Pub/Sub Flow

Event Publishing Pattern:

Publisher Exchange Subscribers

| | |

| 1. Publish Event | |

| +------------+ | |

| |event: call | | |

| | .created| | |

| |data: {...} | | |

| +------------+ | |

+--------------------->> |

| Exchange: | |

| call.events | |

| | 2. Fanout to all |

| | subscribers |

| +------+----------------+

| | | |

| | v v

| | +--------+ +--------+

| | |Billing | |Webhook |

| | |Manager | |Manager |

| | +--------+ +--------+

| | | |

| | 3. Process 3. Process

| | event event

| | independently independently

Event Types

VoIPBIN publishes events for major state changes:

Event Categories:

Call Events:

o call.created - New call initiated

o call.ringing - Call ringing

o call.answered - Call answered

o call.ended - Call terminated

Conference Events:

o conference.created - Conference created

o conference.participant_joined

o conference.participant_left

o conference.ended

SMS Events:

o sms.sent - SMS sent successfully

o sms.delivered - SMS delivered to recipient

o sms.failed - SMS delivery failed

Agent Events:

o agent.login - Agent logged in

o agent.logout - Agent logged out

o agent.status_change - Agent status changed

Transcription Events:

o transcribe.started - Transcription started

o transcribe.completed

o transcript.created - New transcript segment

Event Message Structure

Event Message Format:

{

"event_id": "uuid-v4",

"event_type": "call.created",

"timestamp": "2026-01-20T12:00:00.000Z",

"customer_id": "customer-123",

"resource_type": "call",

"resource_id": "call-789",

"data": {

"id": "call-789",

"source": "+15551234567",

"destination": "+15559876543",

"status": "ringing",

...

}

}

Subscriber Pattern

Services subscribe to events they’re interested in:

Subscriber Implementation:

+------------------------------------------------+

| bin-billing-manager |

| |

| 1. Declare Exchange |

| +- call.events (fanout) |

| |

| 2. Create Queue |

| +- billing.call.events (unique) |

| |

| 3. Bind Queue to Exchange |

| +- Receive all events from exchange |

| |

| 4. Consume Events |

| +- call.created -> Track call start |

| +- call.answered -> Start billing |

| +- call.ended -> Calculate charges |

| +- Other events -> Ignore |

| |

+------------------------------------------------+

Event Processing Guarantees

Event Processing:

+--------------+

| Publish |

+------+-------+

|

| RabbitMQ persists event

| (survives broker restart)

v

+--------------+

| Deliver |

+------+-------+

|

| Subscriber processes

| (may retry on failure)

v

+--------------+

| ACK |

+--------------+

|

| Remove from queue

| (event processed successfully)

v

+--------------+

| Complete |

+--------------+

At-Least-Once Delivery: Events delivered at least once (may duplicate)

Persistent: Events survive broker restart

Manual ACK: Subscriber acknowledges after processing

Retry on Failure: Redelivered if subscriber crashes
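
Because at-least-once delivery can hand the same event to a subscriber twice, subscribers typically deduplicate on `event_id` before applying side effects. A minimal sketch with a hypothetical `IdempotentConsumer`; the in-memory set is an assumption for illustration, and a real service would more likely keep seen IDs in a shared store with an expiry.

```python
from typing import Any, Callable, Dict, List, Set


class IdempotentConsumer:
    def __init__(self, handler: Callable[[Dict[str, Any]], None]) -> None:
        self._handler = handler
        self._seen: Set[str] = set()

    def deliver(self, event: Dict[str, Any]) -> bool:
        """Return True when the message can be ACKed.

        If the handler raises, the event_id is not recorded, so a redelivery
        of the same event is processed again.
        """
        event_id = event["event_id"]
        if event_id in self._seen:
            return True  # duplicate: ACK without reprocessing
        self._handler(event)
        self._seen.add(event_id)
        return True


charges: List[str] = []
consumer = IdempotentConsumer(lambda e: charges.append(e["resource_id"]))
event = {"event_id": "evt-1", "event_type": "call.ended", "resource_id": "call-789"}
consumer.deliver(event)
consumer.deliver(event)  # redelivery of the same event
```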

ZeroMQ Event Streaming

For high-performance, low-latency event streaming, VoIPBIN uses ZeroMQ pub/sub sockets.

ZMQ Architecture

ZeroMQ Pub/Sub Pattern:

Publishers Subscribers

| |

| Call Manager |

| (publishes call events) |

+----------------------+ |

| ZMQ PUB Socket | |

| tcp://*:5555 | |

+----------+-----------+ |

| |

| Event Stream |

| (no broker) |

| |

+---------------------------->> Agent Manager

| | (agent presence)

| |

+---------------------------->> Webhook Manager

| | (webhook delivery)

| |

+---------------------------->> Talk Manager

| (agent UI updates)

Key Differences from RabbitMQ

RabbitMQ vs ZeroMQ:

RabbitMQ: ZeroMQ:

+------------+ +------------+

| Publisher | | Publisher |

+------+-----+ +------+-----+

| |

| Reliable | Fast

| Persistent | In-memory

| Broker-based | Direct socket

v v

+------------+ +------------+

| RabbitMQ | | Subscriber |

| Broker | | (Direct) |

+------+-----+ +------------+

|

| At-least-once

v

+------------+

| Subscriber |

+------------+

RabbitMQ:

o Persistent, reliable

o Guaranteed delivery

o Message queuing

o Higher latency (~10ms)

ZeroMQ:

o In-memory, fast

o Best-effort delivery

o Direct sockets

o Lower latency (<1ms)

Use Cases

VoIPBIN uses ZeroMQ for:

ZeroMQ Use Cases:

[x] Agent Presence Updates

o Agent login/logout

o Status changes (available, busy, away)

o Real-time UI updates

o High frequency, acceptable loss

[x] Call State Changes

o Call ringing, answered, ended

o Conference participant updates

o Also published via RabbitMQ (redundant path)

o Speed over reliability

[x] Real-Time Metrics

o Queue statistics

o Active call counts

o System health metrics

o Dashboard updates

[ ] NOT Used For:

o Billing events (use RabbitMQ)

o Webhook delivery (use RabbitMQ)

o Critical state changes (use RabbitMQ)

ZMQ Message Format

ZMQ Message Structure:

Topic (routing key)

|

+- "agent.presence"

| {

| "agent_id": "agent-123",

| "status": "available",

| "timestamp": "2026-01-20T12:00:00.000Z"

| }

|

+- "call.state"

| {

| "call_id": "call-789",

| "status": "answered",

| "timestamp": "2026-01-20T12:00:01.000Z"

| }

|

+- "queue.stats"

{

"queue_id": "queue-456",

"waiting": 5,

"active": 3

}

Topic Filtering

Subscribers can filter events by topic:

Topic-Based Filtering:

Subscriber A:

o Subscribe to: "agent." (prefix match)

o Receives:

- agent.presence

- agent.login

- agent.logout

Subscriber B:

o Subscribe to: "call." (prefix match)

o Receives:

- call.state

- call.metrics

Subscriber C:

o Subscribe to: "" (empty = all)

o Receives: everything
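
ZeroMQ SUB sockets filter on a byte prefix of the message rather than on glob patterns, and an empty subscription matches every message. The sketch below reproduces that rule in plain Python without a ZeroMQ dependency; `matches` and `deliver` are hypothetical helper names.

```python
from typing import Iterable, List


def matches(subscriptions: Iterable[bytes], topic: bytes) -> bool:
    """True if the topic starts with any subscribed prefix (b"" matches everything)."""
    return any(topic.startswith(prefix) for prefix in subscriptions)


def deliver(subscriptions: List[bytes], topics: List[bytes]) -> List[bytes]:
    return [topic for topic in topics if matches(subscriptions, topic)]


topics = [b"agent.presence", b"agent.login", b"call.state", b"queue.stats"]
agent_only = deliver([b"agent."], topics)
everything = deliver([b""], topics)
literal_star = deliver([b"agent.*"], topics)  # "*" is not a wildcard here
```

The last line shows why a trailing `*` should be left out of a subscription: it is compared literally, so no topic matches.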

WebSocket Communication

For real-time client communication, VoIPBIN uses WebSocket connections.

WebSocket Architecture

WebSocket Connection Flow:

Client (Browser/App) API Gateway Backend Services

| | |

| 1. HTTP Upgrade | |

| (WebSocket) | |

+--------------------->> |

| | 2. Authenticate |

| | (JWT token) |

| | |

| 3. Connection | |

| Established | |

<<---------------------+ |

| | |

| 4. Subscribe | |

| {"type":"subscribe",| |

| "topics":["..."]} | |

+--------------------->> |

| | 5. Register |

| | subscription |

| | |

| | 6. Backend Event |

| <<----------------------+

| | (via RabbitMQ/ZMQ) |

| | |

| 7. Push to Client | |

<<---------------------+ |

| {"event":"call. | |

| created",...} | |

| | |

Subscription Topics

Clients subscribe to specific event topics:

Topic Pattern:

customer_id:<id>:<resource>:<resource_id>

Examples:

o customer_id:123:call:*

-> All calls for customer 123

o customer_id:123:call:call-789

-> Specific call updates

o customer_id:123:agent:agent-456

-> Specific agent updates

o customer_id:123:queue:*

-> All queues for customer

o customer_id:123:conference:conf-999

-> Specific conference updates
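
Matching an incoming event against these subscription patterns can be sketched as a segment-by-segment comparison. This assumes `*` stands for exactly one colon-separated segment, which the examples suggest but do not state; `topic_matches` and `owned_by` are hypothetical names.

```python
def topic_matches(pattern: str, topic: str) -> bool:
    """Compare colon-separated segments; "*" in the pattern matches any one segment."""
    pattern_parts = pattern.split(":")
    topic_parts = topic.split(":")
    if len(pattern_parts) != len(topic_parts):
        return False
    return all(p == "*" or p == t for p, t in zip(pattern_parts, topic_parts))


def owned_by(customer_id: str, pattern: str) -> bool:
    """Reject subscriptions that reach outside the authenticated customer."""
    return pattern.startswith(f"customer_id:{customer_id}:")


all_calls = topic_matches("customer_id:123:call:*", "customer_id:123:call:call-789")
other_call = topic_matches("customer_id:123:call:call-789", "customer_id:123:call:call-790")
other_customer = owned_by("123", "customer_id:456:call:*")
```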

WebSocket Use Cases

WebSocket Applications:

Agent Dashboard:

+--------------------------------------+

| o Real-time call notifications |

| o Queue status updates |

| o Agent presence |

| o Live chat messages |

+--------------------------------------+

Customer Portal:

+--------------------------------------+

| o Call status updates |

| o Campaign progress |

| o Billing updates |

| o System notifications |

+--------------------------------------+

Media Streaming:

+--------------------------------------+

| o Bi-directional audio (RTP) |

| o Live transcription feed |

| o Real-time metrics |

+--------------------------------------+

Connection Management

WebSocket Lifecycle:

+------------+

| Connect | Client establishes WebSocket

+------+-----+

|

v

+------------+

| Authenticate| Validate JWT token

+------+-----+

|

v

+------------+

| Subscribe | Client subscribes to topics

+------+-----+

|

v

+------------+

| Active | Bi-directional communication

| | o Server pushes events

| | o Client sends commands

| | o Pinger sends ping frames

+------+-----+

|

| (Keep-alive ping/pong)

|

v

+------------+

| Disconnect | Connection closed

+------------+

Keep-Alive Mechanism (Server-Side Ping/Pong)

VoIPBIN implements server-side keep-alive to prevent load balancer timeouts:

Keep-Alive Configuration:

+------------------------------------------------+

| Ping Interval: 30 seconds |

| Pong Wait: 60 seconds |

| Write Timeout: 10 seconds |

+------------------------------------------------+

Keep-Alive Flow:

Server Client

| |

| Every 30s: Send Ping Frame |

+---------------------------------------->>

| |

| Automatic Pong Response |

<<----------------------------------------+

| |

| Reset read deadline (60s) |

| |

Error Detection:

+------------------------------------------------+

| No pong within 60s -> Connection dead |

| Write failure -> Connection broken |

| Either error -> Close and cleanup |

+------------------------------------------------+

Keep-Alive Benefits:

Prevents Idle Drops: Load balancers see regular traffic

Dead Connection Detection: Server detects unresponsive clients

Automatic Cleanup: Zombie connections closed promptly

RFC 6455 Compliant: Uses standard WebSocket ping/pong frames

Connection Features:

Keepalive: Server-side ping every 30 seconds

Dead Detection: 60-second timeout for pong response

Auto-Reconnect: Client should reconnect on disconnect

Subscription Restore: Re-subscribe after reconnect

Write Protection: Mutex prevents concurrent write race conditions
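
The timing rules above (ping every 30 seconds, treat the connection as dead if no pong arrives within 60 seconds) can be sketched as a small tracker. `KeepAlive` is a hypothetical class that models only the deadlines, not the WebSocket I/O.

```python
import time
from typing import Callable

PING_INTERVAL = 30.0  # seconds between server pings
PONG_WAIT = 60.0      # read deadline, reset on every pong


class KeepAlive:
    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        now = clock()
        self._last_ping = now
        self._read_deadline = now + PONG_WAIT

    def should_ping(self) -> bool:
        return self._clock() - self._last_ping >= PING_INTERVAL

    def ping_sent(self) -> None:
        self._last_ping = self._clock()

    def pong_received(self) -> None:
        self._read_deadline = self._clock() + PONG_WAIT

    def is_dead(self) -> bool:
        return self._clock() > self._read_deadline


now = [0.0]  # fake clock
conn = KeepAlive(clock=lambda: now[0])
now[0] = 30.0
due = conn.should_ping()
conn.ping_sent()
conn.pong_received()  # read deadline moves to t=90
now[0] = 61.0
alive_at_61 = not conn.is_dead()
now[0] = 91.0
dead_at_91 = conn.is_dead()
```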

Message Reliability

Different patterns provide different reliability guarantees:

Reliability Comparison:

Pattern            Delivery                          Persistence   Use Case

─────────────────────────────────────────────────────────────────────────────────────

RabbitMQ RPC       At-least-once (idempotent retry)  Yes           Critical ops

RabbitMQ Pub/Sub   At-least-once (may duplicate)     Yes           Important events

ZeroMQ Pub/Sub     Best-effort (may lose)            No            Real-time updates

WebSocket          Best-effort (may lose)            No            Client notifications

Reliability Patterns

Ensuring Reliability:

Critical Operations (RabbitMQ RPC):

+------------------------------------+

| o Persistent messages |

| o Manual acknowledgment |

| o Automatic retry |

| o Timeout handling |

| o Idempotent operations |

+------------------------------------+

Important Events (RabbitMQ Pub/Sub):

+------------------------------------+

| o Persistent messages |

| o Multiple subscribers |

| o Redundant processing OK |

| o Deduplication in subscriber |

+------------------------------------+

Real-Time Updates (ZeroMQ):

+------------------------------------+

| o No persistence |

| o Fast delivery |

| o Acceptable loss |

| o Often also sent via RabbitMQ |

+------------------------------------+

Message Ordering

VoIPBIN guarantees ordering within specific boundaries:

Ordering Guarantees:

Same Queue: Different Queues:

+----------+ +----------+ +----------+

| Message 1| | Message 1| | Message 2|

+-----+----+ +-----+----+ +-----+----+

| | |

| Queue A | Queue A | Queue B

| | |

v v v

+----------+ +----------+ +----------+

| Message 2| | Service A| | Service B|

+-----+----+ +----------+ +----------+

| | |

| | May arrive in any order

v v v

+----------+ +----------+ +----------+

| Message 3| | Ordered | | No order |

+----------+ | delivery | | guarantee|

+----------+ +----------+

Ordered [x] Unordered [ ]

Ordering Strategy:

Within Queue: Messages delivered in order to same consumer

Across Queues: No ordering guarantee

Single Publisher: Maintains order if using single connection

Application Logic: Handle out-of-order messages when necessary

Error Handling and Retries

VoIPBIN implements comprehensive error handling:

Retry Strategy

Exponential Backoff Retry:

Attempt Delay Total Time

──────────────────────────────────

1 0s 0s

2 1s 1s

3 2s 3s

4 4s 7s

5 8s 15s

6 16s 31s

7 32s 63s

Max: 7 attempts, ~1 minute total

Dead Letter Queue

Failed messages move to dead letter queue for investigation:

Dead Letter Processing:

Normal Flow: Failed Flow:

+----------+ +----------+

| Message | | Message |

+-----+----+ +-----+----+

| |

| Process | Process (fails)

v v

+----------+ +----------+

| Success | | Retry |

+----------+ +-----+----+

| (max retries exceeded)

v

+----------+

| DLQ | Dead Letter Queue

+-----+----+

|

| Manual investigation

| or automated recovery

v

+----------+

| Alert |

+----------+
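
The failed flow can be sketched with an in-memory queue: a message is requeued until it reaches the attempt limit, then moved to a dead letter list. This is a simplified model with hypothetical names; it omits the backoff delays, and with RabbitMQ the dead-lettering would normally be configured on the broker rather than coded by hand.

```python
from collections import deque
from typing import Callable, Deque, List, Tuple


def process_with_dlq(messages: List[str], handler: Callable[[str], None],
                     max_attempts: int = 7) -> Tuple[List[str], List[str]]:
    queue: Deque[Tuple[str, int]] = deque((message, 1) for message in messages)
    done: List[str] = []
    dead_letters: List[str] = []
    while queue:
        message, attempt = queue.popleft()
        try:
            handler(message)
            done.append(message)
        except Exception:
            if attempt >= max_attempts:
                dead_letters.append(message)  # max retries exceeded
            else:
                queue.append((message, attempt + 1))  # requeue for another attempt
    return done, dead_letters


calls: List[str] = []


def handler(message: str) -> None:
    calls.append(message)
    if message == "bad":
        raise ValueError("cannot process")


done, dead_letters = process_with_dlq(["a", "bad", "b"], handler)
```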

Error Categories

Error Handling by Type:

Transient Errors (Retry):

o Network timeout

o Database connection lost

o Service temporarily unavailable

-> Retry with exponential backoff

Permanent Errors (Don't Retry):

o Invalid data format

o Resource not found

o Permission denied

-> Send to DLQ, alert operator

Business Errors (Log and Return):

o Insufficient balance

o Invalid phone number

o Duplicate request

-> Return error to caller
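
The three categories map naturally onto a classification function that decides what to do with a failure. The exception classes and the `Action` enum are hypothetical, and sending unknown errors to the DLQ is an assumption, not a documented rule.

```python
from enum import Enum


class Action(Enum):
    RETRY = "retry"
    DEAD_LETTER = "dead_letter"
    RETURN_ERROR = "return_error"


class PermanentError(Exception):
    """Invalid data format, resource not found, permission denied."""


class BusinessError(Exception):
    """Insufficient balance, invalid phone number, duplicate request."""


def classify(error: Exception) -> Action:
    if isinstance(error, BusinessError):
        return Action.RETURN_ERROR
    if isinstance(error, PermanentError):
        return Action.DEAD_LETTER
    if isinstance(error, (TimeoutError, ConnectionError)):
        return Action.RETRY  # transient: retry with exponential backoff
    return Action.DEAD_LETTER  # assumption: unknown errors are not retried
```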

Performance Optimization

VoIPBIN optimizes messaging performance:

Connection Pooling

Connection Management:

Service Instance

+------------------------------------+

| |

| Channel Pool (5 channels) |

| +----+ +----+ +----+ +----+ +----+|

| | 1 | | 2 | | 3 | | 4 | | 5 ||

| +-+--+ +-+--+ +-+--+ +-+--+ +-+--+|

| | | | | | |

+----+------+------+------+------+---+

| | | | |

+------+------+------+------+

|

| Single TCP connection

v

+----------+

| RabbitMQ |

+----------+

Reuse Connections: Don’t create per-request

Multiple Channels: Use channels for concurrency

Connection Limits: Pool size based on load

Health Checks: Monitor connection health

Batch Processing

For high-volume operations:

Batch vs Individual:

Individual Messages: Batch Processing:

+----+ +----+ +----+ +--------------+

| M1 | | M2 | | M3 | | M1, M2, M3 |

+-+--+ +-+--+ +-+--+ | M4, M5, M6 |

| | | | ... (100) |

v v v +------+-------+

Send 100 times Send once

(high overhead) (low overhead)

Bulk Publishing: Send multiple messages at once

Bulk ACK: Acknowledge multiple messages together

Reduced Overhead: Fewer network round-trips

Higher Throughput: Up to 10x-100x improvement, depending on message size and workload
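
Grouping messages into fixed-size batches before publishing can be sketched with a small generator. `batches` is a hypothetical helper; the batch size of 100 follows the diagram above.

```python
from typing import Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def batches(items: Sequence[T], size: int = 100) -> Iterator[List[T]]:
    """Yield consecutive slices of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    for start in range(0, len(items), size):
        yield list(items[start:start + size])


messages = [f"M{i}" for i in range(1, 251)]
batch_sizes = [len(batch) for batch in batches(messages)]
```

Each batch would then be published, or acknowledged, in one operation instead of one per message.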

Monitoring and Debugging

VoIPBIN monitors all communication channels:

Metrics

Message Queue Metrics:

Queue Depth:

+---------------------------------+

| Pending Messages |

| +--++--++--++--++--+ |

| |M1||M2||M3||M4||M5|... |

| +--++--++--++--++--+ |

+---------------------------------+

Alert if > 1000 messages

Processing Rate:

Messages/sec: ======== 850/s

Target: ======== 1000/s

Alert if < 500/s

Error Rate:

Failures: == 2%

Target: == < 5%

Alert if > 10%

Distributed Tracing

Track requests across services:

Trace ID: trace-123

1. API Gateway [50ms]

+- Authenticate [5ms]

+- Authorize [10ms]

+- Send RPC [35ms]

|

v

2. Call Manager [80ms]

+- Validate [10ms]

+- Create Record [20ms]

+- Initiate Call [50ms]

|

v

3. RTC Manager [120ms]

+- Setup Media [120ms]

Total: 250ms

Correlation IDs: Track requests across services

Timing: Measure latency at each hop

Errors: Identify where failures occur

Dependencies: Visualize service interactions

Best Practices

Message Design:

Keep messages small (<1MB)

Use JSON for human-readable format

Include timestamps for debugging

Add correlation IDs for tracing

Error Handling:

Always handle errors gracefully

Implement retry with exponential backoff

Use dead letter queues for failed messages

Alert on high error rates

Performance:

Use connection pooling

Batch messages when possible

Set appropriate timeouts

Monitor queue depths

Security:

Encrypt sensitive data in messages

Validate all incoming messages

Use authentication for connections

Limit message size to prevent abuse

Data Architecture

Note

AI Context

This page describes VoIPBIN’s data layer: shared MySQL database (schema organization, common table patterns, migrations via Alembic), Redis cache (cache-aside pattern, key naming, TTL strategies), and session management. Relevant when an AI agent needs to understand database schema conventions, caching strategies, data consistency models, or backup/recovery procedures.

VoIPBIN uses a shared data layer with MySQL for persistent storage and Redis for caching and session management. This architecture provides consistency across services while enabling high-performance data access.

Data Layer Overview

VoIPBIN’s data architecture consists of three layers:

Data Architecture:

+---------------------------------------------------------+

| Application Layer |

| (30+ Microservices) |

+--------------------+-------------------+----------------+

| |

| |

+---------------v------+ +--------v-----------+

| | | |

| Redis Cache | | MySQL Database |

| (Hot Data) | | (Persistent) |

| | | |

| o Sessions | | o All entities |

| o Frequently read | | o Relationships |

| o Temporary data | | o Audit logs |

| | | |

+----------------------+ +--------------------+

Cache-Aside Pattern:

1. Check cache first

2. If miss, query database

3. Store in cache for next time

MySQL Database

VoIPBIN uses a single shared MySQL database accessed by all services.

Database Characteristics

Shared Database Pattern:

+--------------+ +--------------+ +--------------+

| Service A | | Service B | | Service C |

| | | | | |

| call-mgr | | flow-mgr | | agent-mgr |

+------+-------+ +------+-------+ +------+-------+

| | |

| Connection | |

| Pooling | |

+--------+--------+-----------------+

|

v

+----------------------------+

| MySQL Database |

| |

| +----------------------+ |

| | calls table | |

| | conferences table | |

| | agents table | |

| | flows table | |

| | customers table | |

| | ... 100+ tables | |

| +----------------------+ |

+----------------------------+

Shared Schema: All services access same database

Logical Separation: Services own specific tables

ACID Transactions: Strong consistency guarantees

Connection Pooling: Each service maintains pool

Schema Organization

Tables are logically grouped by domain:

Table Organization:

Communication Domain:

o calls - Call records

o conferences - Conference bridges

o sms - SMS messages

o chats - Chat messages

o emails - Email records

Workflow Domain:

o flows - Call flow definitions

o flow_actions - Flow action steps

o queues - Call queues

o campaigns - Campaign definitions

Management Domain:

o customers - Customer accounts

o agents - Agent records

o billings - Billing records

o webhooks - Webhook configurations

o accesskeys - API keys

Resource Domain:

o numbers - Phone numbers

o recordings - Call recordings

o transcribes - Transcription jobs

o transcripts - Transcript segments

Common Table Pattern

All tables follow a consistent structure:

Standard Table Schema:

CREATE TABLE resource (

id VARCHAR(36) PRIMARY KEY, -- UUID

customer_id VARCHAR(36) NOT NULL, -- Ownership

-- Resource-specific fields

name VARCHAR(255),

status VARCHAR(50),

detail TEXT,

-- Timestamps

tm_create DATETIME(6) NOT NULL, -- Creation time

tm_update DATETIME(6) NOT NULL, -- Last update

tm_delete DATETIME(6) NOT NULL, -- Soft delete

-- Indexes

INDEX idx_customer (customer_id),

INDEX idx_status (status),

INDEX idx_tm_create (tm_create),

INDEX idx_tm_delete (tm_delete)

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4;

Key Design Patterns:

UUID Primary Keys: Globally unique identifiers

Customer Ownership: Every resource has customer_id

Soft Deletes: tm_delete = ‘9999-01-01’ for active records

Microsecond Timestamps: DATETIME(6) for precise ordering

UTF8MB4: Full Unicode support including emojis
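
The soft-delete convention can be demonstrated with an in-memory SQLite database. This is only an illustration: the real schema is MySQL, the column types are simplified to TEXT, and setting tm_delete to the deletion time on delete is an assumption drawn from the sentinel value described above.

```python
import sqlite3

ACTIVE = "9999-01-01 00:00:00.000000"  # sentinel tm_delete value for live rows

conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE resource (
        id          TEXT PRIMARY KEY,
        customer_id TEXT NOT NULL,
        name        TEXT,
        tm_create   TEXT NOT NULL,
        tm_update   TEXT NOT NULL,
        tm_delete   TEXT NOT NULL
    )
    """
)
conn.execute("CREATE INDEX idx_customer ON resource (customer_id)")


def create(resource_id: str, customer_id: str, name: str, now: str) -> None:
    with conn:
        conn.execute(
            "INSERT INTO resource VALUES (?, ?, ?, ?, ?, ?)",
            (resource_id, customer_id, name, now, now, ACTIVE),
        )


def soft_delete(resource_id: str, now: str) -> bool:
    """Mark a live row as deleted; False if it was missing or already deleted."""
    with conn:
        cursor = conn.execute(
            "UPDATE resource SET tm_update = ?, tm_delete = ? "
            "WHERE id = ? AND tm_delete = ?",
            (now, now, resource_id, ACTIVE),
        )
    return cursor.rowcount == 1


def list_active(customer_id: str) -> list:
    rows = conn.execute(
        "SELECT id FROM resource WHERE customer_id = ? AND tm_delete = ? "
        "ORDER BY tm_create",
        (customer_id, ACTIVE),
    )
    return [row[0] for row in rows]


create("r1", "customer-123", "first", "2026-01-20 12:00:00.000000")
create("r2", "customer-123", "second", "2026-01-20 12:00:01.000000")
deleted = soft_delete("r1", "2026-01-20 12:00:02.000000")
deleted_again = soft_delete("r1", "2026-01-20 12:00:03.000000")
active = list_active("customer-123")
```

Every read filters on the sentinel, so deleted rows stay in the table for auditing but never appear in normal queries.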

Data Access Patterns

Services access data through consistent patterns:

Data Access Flow:

Service Handler

|

| 1. Validate Input

v

+----------------------+

| Business Logic |

+------+---------------+

| 2. Check Cache

v

+----------------------+

| Cache Handler |

| (Redis) |

+------+---------------+

| Cache Miss

| 3. Query DB

v

+----------------------+

| DB Handler |

| (MySQL) |

+------+---------------+

| 4. Store in Cache

v

+----------------------+

| Return Result |

+----------------------+

Transaction Handling

VoIPBIN uses transactions for consistency:

Transaction Example:

BEGIN TRANSACTION

|

| 1. Create Call Record

+--> INSERT INTO calls ...

|

| 2. Update Customer Stats

+--> UPDATE customers SET total_calls = total_calls + 1 ...

|

| 3. Create Billing Entry

+--> INSERT INTO billings ...

|

| If all succeed:

| COMMIT

| If any fails:

| ROLLBACK

|

END TRANSACTION

ACID Guarantees: Atomic, Consistent, Isolated, Durable

Rollback on Error: All changes reverted if any step fails

Isolation Levels: READ COMMITTED for most operations

Lock Timeout: 30 seconds, so a blocked transaction fails instead of waiting indefinitely
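
The commit-or-rollback behavior of the three-step example can be sketched with SQLite's transaction support. The tables are cut down to a few columns, and the CHECK constraint on `amount` is a hypothetical way to force the third step to fail.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (id TEXT PRIMARY KEY, total_calls INTEGER NOT NULL);
    CREATE TABLE calls (id TEXT PRIMARY KEY, customer_id TEXT NOT NULL);
    CREATE TABLE billings (
        id TEXT PRIMARY KEY,
        call_id TEXT NOT NULL,
        amount REAL NOT NULL CHECK (amount >= 0)
    );
    INSERT INTO customers VALUES ('customer-123', 0);
    """
)


def create_call(call_id: str, customer_id: str, amount: float) -> bool:
    try:
        with conn:  # commits on success, rolls back if the block raises
            conn.execute("INSERT INTO calls VALUES (?, ?)", (call_id, customer_id))
            conn.execute(
                "UPDATE customers SET total_calls = total_calls + 1 WHERE id = ?",
                (customer_id,),
            )
            conn.execute(
                "INSERT INTO billings VALUES (?, ?, ?)",
                (f"bill-{call_id}", call_id, amount),
            )
        return True
    except sqlite3.Error:
        return False


first = create_call("call-1", "customer-123", 0.5)
second = create_call("call-2", "customer-123", -1.0)  # billing insert violates CHECK
call_count = conn.execute("SELECT COUNT(*) FROM calls").fetchone()[0]
total_calls = conn.execute("SELECT total_calls FROM customers").fetchone()[0]
```

The failed second attempt leaves no call row and no counter increment behind, which is the all-or-nothing behavior the diagram describes.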

Query Optimization

VoIPBIN optimizes queries for performance:

Query Optimization Strategies:

1. Proper Indexing:

+---------------------------------+

| INDEX idx_customer_status |

| ON calls (customer_id, status) |

+---------------------------------+

SELECT * FROM calls

WHERE customer_id = ? AND status = 'active'

-> Uses index, fast lookup

2. Avoid SELECT *:

+---------------------------------+

| SELECT id, status, tm_create |

| FROM calls WHERE ... |

+---------------------------------+

-> Only retrieve needed columns

3. Pagination:

+---------------------------------+

| SELECT id, status, tm_create |

| FROM calls WHERE customer_id = ? |

| ORDER BY tm_create DESC |

| LIMIT 50 OFFSET 0 |

+---------------------------------+

-> Limit result size

4. Connection Pooling:

+---------------------------------+

| Pool Size: 10-50 connections |

| Max Idle: 5 minutes |

| Max Lifetime: 1 hour |

+---------------------------------+

-> Reuse connections

Database Migrations

Schema changes are managed through Alembic migrations:

Migration Workflow:

Development Migration Script Production

| | |

| 1. Schema Change | |

| Needed | |

v | |

+-------------+ | |

| Create | | |

| Migration |------------------>| |

| Script | | |

+-------------+ | |

| | |

| 2. Test Locally | |

v | |

+-------------+ | |

| Run | | |

| Migration |<------------------| |

| (dev DB) | | |

+-------------+ | |

| | |

| 3. Commit to Git | |

v | |

+-------------+ | |

| Code Review | | |

| & Approval | | |

+-------------+ | |

| | |

| 4. Deploy | |

| | 5. Manual Execution |

| | (by human) |

| +-------------------------->>

| | |

| | alembic upgrade head |

| | |

Migration Best Practices:

Version Control: All migrations in git

Forward Only: Never modify existing migrations

Backward Compatible: Support gradual rollout

Manual Execution: Humans run migrations, not automation

Testing: Test on staging before production

Redis Cache

Redis provides fast access to frequently used data:

Cache Architecture

Redis Cache Pattern:

Application Request

|

| 1. Generate Cache Key

| key = "call:123"

v

+--------------------+

| Check Redis |

| GET call:123 |

+----+---------------+

|

+- Cache Hit --------+

| |

| v

| +----------------+

| | Return Cached |

| | Data (fast) |

| +----------------+

|

+- Cache Miss -------+

| |

| v

| +----------------+

| | Query MySQL |

| +----+-----------+

| |

| v

| +----------------+

| | Store in Redis |

| | SET call:123 |

| | EX 300 (5 min) |

| +----+-----------+

| |

| v

| +----------------+

| | Return Data |

| +----------------+
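The read path in the diagram above can be sketched as follows. This is a minimal illustration under stated assumptions: `FakeRedis` is a tiny in-memory stand-in for the Redis `GET` and `SET ... EX` commands, and `query_mysql` is a hypothetical database lookup. Neither is VoIPBIN code.

```python
import json
import time

class FakeRedis:
    """In-memory stand-in for Redis GET / SET ... EX (demo assumption)."""

    def __init__(self, clock=time.monotonic):
        self._data = {}
        self._clock = clock

    def get(self, key):
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._data[key]  # expired entries behave like missing keys
            return None
        return value

    def set(self, key, value, ex):
        self._data[key] = (value, self._clock() + ex)

db_queries = []

def query_mysql(call_id):
    """Hypothetical database lookup; records each call for the demo."""
    db_queries.append(call_id)
    return {"id": call_id, "status": "active"}

def get_call(cache, call_id, ttl=300):
    key = f"call:{call_id}"                     # 1. generate cache key
    cached = cache.get(key)                     # 2. check the cache
    if cached is not None:
        return json.loads(cached)               # cache hit
    record = query_mysql(call_id)               # cache miss: query the database
    cache.set(key, json.dumps(record), ex=ttl)  # store with a 5 minute TTL
    return record

now = [0.0]  # controllable clock so the demo does not need to sleep
cache = FakeRedis(clock=lambda: now[0])
first = get_call(cache, "123")    # miss, hits the database
second = get_call(cache, "123")   # hit, served from the cache
now[0] += 301
third = get_call(cache, "123")    # entry expired, hits the database again
```

Only the first and third reads reach the database; the second is served entirely from the cache.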

Cache Key Patterns

VoIPBIN uses structured cache keys:

Key Naming Convention:

<resource>:<id>[:<field>]

Examples:

o call:abc-123 -> Full call record

o agent:xyz-789:status -> Agent status only

o customer:customer-456 -> Customer record

o queue:queue-999:stats -> Queue statistics

o flow:flow-111:definition -> Flow definition

Advantages:

o Predictable keys

o Easy to invalidate

o Pattern matching for bulk operations
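A small helper keeps keys consistent with the `<resource>:<id>[:<field>]` convention above. The function names `cache_key` and `parse_cache_key` are illustrative assumptions, not part of VoIPBIN.

```python
def cache_key(resource, resource_id, field=None):
    """Build a key of the form <resource>:<id>[:<field>]."""
    for part in (resource, resource_id):
        if ":" in part:
            raise ValueError(f"':' is reserved as the separator: {part!r}")
    parts = [resource, resource_id]
    if field is not None:
        parts.append(field)
    return ":".join(parts)

def parse_cache_key(key):
    """Split a key back into (resource, id, field); field may be None."""
    parts = key.split(":", 2)
    if len(parts) < 2:
        raise ValueError(f"not a valid cache key: {key!r}")
    field = parts[2] if len(parts) == 3 else None
    return parts[0], parts[1], field

call_key = cache_key("call", "abc-123")
status_key = cache_key("agent", "xyz-789", "status")
```

Building keys in one place is what makes them predictable and easy to invalidate.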

Data Structures

Redis supports multiple data structures:

Redis Data Structures:

1. String (Simple Values):

SET call:123:status "active"

GET call:123:status

-> "active"

2. Hash (Object Fields):

HSET call:123 status "active" duration "120"

HGET call:123 status

-> "active"

HGETALL call:123

-> {"status": "active", "duration": "120"}

3. List (Ordered Collection):

LPUSH queue:456:waiting call:123

LPUSH queue:456:waiting call:789

LRANGE queue:456:waiting 0 -1

-> [call:789, call:123]

4. Set (Unique Collection):

SADD conference:999:participants agent:111

SADD conference:999:participants agent:222

SMEMBERS conference:999:participants

-> [agent:111, agent:222]

5. Sorted Set (Scored Collection):

ZADD leaderboard 100 agent:111

ZADD leaderboard 95 agent:222

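ZRANGE leaderboard 0 -1 WITHSCORES

-> [(agent:222, 95), (agent:111, 100)] (ascending by score)

ZREVRANGE leaderboard 0 -1 WITHSCORES

-> [(agent:111, 100), (agent:222, 95)] (highest score first)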

Cache Expiration

All cached data has a Time-To-Live (TTL):

TTL Strategy:

Data Type         TTL        Reason

─────────────────────────────────────────────

Session tokens    1 hour     Security

User profiles     5 min      Frequently updated

Call records      1 min      Real-time changes

Configuration     1 hour     Rarely changes

Static data       24 hours   Almost never changes

Set TTL:

SET key value EX 300 # 5 minutes

SETEX key 300 value # Same as above

EXPIRE key 300 # Set TTL on existing key
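One way to keep these TTLs in a single place is a lookup table expressed in seconds, the unit that `EX` and `EXPIRE` expect. The names `TTL_SECONDS` and `ttl_for` and the dictionary keys are assumptions made for this sketch; the values come from the table above.

```python
# TTLs from the strategy table, in seconds (the unit used by EX / EXPIRE).
TTL_SECONDS = {
    "session_token": 60 * 60,      # 1 hour
    "user_profile": 5 * 60,        # 5 minutes
    "call_record": 60,             # 1 minute
    "configuration": 60 * 60,      # 1 hour
    "static_data": 24 * 60 * 60,   # 24 hours
}

def ttl_for(data_type):
    """Return the TTL in seconds for a data type.

    Unknown types raise KeyError, so nothing is cached without an
    explicit expiry decision.
    """
    return TTL_SECONDS[data_type]

profile_ttl = ttl_for("user_profile")
static_ttl = ttl_for("static_data")
```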

Cache Invalidation

VoIPBIN invalidates the cached entry whenever the underlying record is updated:

Cache Invalidation Flow:

Update Request

|

| 1. Update Database

v

+--------------------+

| UPDATE calls |

| SET status='ended'|

| WHERE id='123' |

+----+---------------+

|

| 2. Invalidate Cache

v

+--------------------+

| DEL call:123 |

+----+---------------+

|

| 3. Return Success

v

+--------------------+

| Response to Client|

+--------------------+

Next Read:

o Cache miss

o Fetch from DB

o Store in cache with new data
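The invalidation flow above can be sketched as follows. Plain dictionaries stand in for MySQL and Redis, and the function names are assumptions made for illustration.

```python
# Plain dicts stand in for MySQL and Redis (assumption for this sketch).
db = {"123": {"id": "123", "status": "active"}}
cache = {"call:123": {"id": "123", "status": "active"}}

def update_call_status(call_id, status):
    db[call_id]["status"] = status      # 1. update the database (UPDATE calls ...)
    cache.pop(f"call:{call_id}", None)  # 2. invalidate the cache (DEL call:<id>)
    return True                         # 3. return success

def read_call(call_id):
    """Cache-aside read; also reports whether the cache was hit."""
    key = f"call:{call_id}"
    if key in cache:
        return cache[key], "hit"
    record = dict(db[call_id])  # fetch from the database
    cache[key] = record         # store the fresh data in the cache
    return record, "miss"

update_call_status("123", "ended")
first_read = read_call("123")   # miss: the stale entry was deleted
second_read = read_call("123")  # hit: the cache now holds the new data
```

Deleting the key rather than overwriting it means the next reader repopulates the cache from the database, so the cache never holds a value the database did not produce.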

Cache Patterns

Common Cache Patterns:

1. Cache-Aside (Lazy Loading):

App checks cache -> Cache miss -> Query DB -> Store in cache

2. Write-Through:

App writes to cache -> Cache writes to DB -> Return success

3. Write-Behind (Async):

App writes to cache -> Return success -> Cache writes to DB later

VoIPBIN primarily uses Cache-Aside for simplicity and consistency.
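For contrast with Cache-Aside, the two write patterns listed above can be sketched as follows. The class names are illustrative assumptions, and dictionaries stand in for the cache and the database.

```python
from collections import deque

class WriteThroughCache:
    """Write to the cache and the database before returning."""

    def __init__(self, db):
        self.db = db
        self.cache = {}

    def write(self, key, value):
        self.cache[key] = value
        self.db[key] = value  # synchronous: the database is updated immediately

class WriteBehindCache:
    """Write to the cache now and to the database later."""

    def __init__(self, db):
        self.db = db
        self.cache = {}
        self.pending = deque()

    def write(self, key, value):
        self.cache[key] = value
        self.pending.append((key, value))  # database write is deferred

    def flush(self):
        # In a real system this would run in the background.
        while self.pending:
            key, value = self.pending.popleft()
            self.db[key] = value

db_through, db_behind = {}, {}
WriteThroughCache(db_through).write("call:123", "ended")

behind = WriteBehindCache(db_behind)
behind.write("call:123", "ended")
in_db_before_flush = "call:123" in db_behind  # database lags behind the cache
behind.flush()
in_db_after_flush = "call:123" in db_behind
```

Write-Behind leaves a window in which the database lags the cache, which is one reason a simpler pattern such as Cache-Aside can be easier to reason about.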

Session Management

Redis stores session data for authenticated users:

Session Structure

Session Data in Redis:

Key: session:<token-hash>

Type: Hash

TTL: 1 hour (refreshed on activity)

Data:

+-------------------------------------+

| customer_id : customer-123 |

| agent_id : agent-456 |

| permissions : ["admin", "call"] |

| login_time : 2026-01-20 12:00 |

| last_activity : 2026-01-20 12:30 |

| ip_address : 192.168.1.100 |

| user_agent : Mozilla/5.0 ... |

+-------------------------------------+

Session Lifecycle

Session Flow:

1. Login:

+----------------------------+

| Generate JWT token |

| Hash token -> session_key |

| Store session in Redis |

| HSET session:xyz {...} |

| EXPIRE session:xyz 3600 |

+----------------------------+

2. Request:

+----------------------------+

| Extract token from header |

| Hash token -> session_key |

| HGETALL session:xyz |

| Validate session data |

| EXPIRE session:xyz 3600 | <- Refresh TTL

+----------------------------+

3. Logout:

+----------------------------+

| Extract token from header |

| Hash token -> session_key |

| DEL session:xyz |

+----------------------------+
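The three steps above can be sketched as follows. This is an illustration under stated assumptions, not VoIPBIN's implementation: `SessionStore` is an in-memory stand-in for a Redis hash with `EXPIRE`, SHA-256 is assumed as the token hash (the text only says the token is hashed), and the clock is injected so the demo does not need to wait.

```python
import hashlib

SESSION_TTL = 3600  # 1 hour, refreshed on activity

class SessionStore:
    """In-memory stand-in for a Redis hash plus EXPIRE (demo assumption)."""

    def __init__(self, clock):
        self._clock = clock
        self._sessions = {}  # session key -> (fields, expires_at)

    @staticmethod
    def key_for(token):
        # SHA-256 is an assumed hash function for this sketch.
        digest = hashlib.sha256(token.encode("utf-8")).hexdigest()
        return "session:" + digest

    def login(self, token, fields):
        expires_at = self._clock() + SESSION_TTL
        self._sessions[self.key_for(token)] = (dict(fields), expires_at)

    def validate(self, token):
        key = self.key_for(token)
        entry = self._sessions.get(key)
        if entry is None:
            return None
        fields, expires_at = entry
        if self._clock() >= expires_at:
            del self._sessions[key]  # expired session
            return None
        # Refresh the TTL on activity.
        self._sessions[key] = (fields, self._clock() + SESSION_TTL)
        return fields

    def logout(self, token):
        self._sessions.pop(self.key_for(token), None)

now = [0]
store = SessionStore(clock=lambda: now[0])
store.login("example-jwt", {"customer_id": "customer-123"})

now[0] = 3000
active = store.validate("example-jwt")        # valid; TTL refreshed
now[0] = 6000
still_active = store.validate("example-jwt")  # valid only because of the refresh
now[0] = 9600
expired = store.validate("example-jwt")       # no activity for a full hour

store.login("example-jwt", {"customer_id": "customer-123"})
store.logout("example-jwt")
after_logout = store.validate("example-jwt")
```

Storing the session under a hash of the token means the raw token never appears as a key in the store.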

Data Consistency

VoIPBIN treats MySQL as the source of truth and keeps the Redis cache eventually consistent with it:

Consistency Model

Consistency Strategy:

Strong Consistency: Eventual Consistency:

+--------------+ +--------------+

| MySQL | | Redis |

| (Source of | | (May be |

| Truth) | | stale) |

+------+-------+ +------+-------+

| |

| Always consistent | May lag behind

| ACID transactions | Best effort

| |

+----------+---------------+