AI

Configure AI-powered voice agents with speech-to-text (STT), text-to-speech (TTS), and conversational AI capabilities. AI agents can handle inbound and outbound calls autonomously using natural language processing.

API Reference: AI endpoints

Overview

Note

AI Context

Complexity: High

Cost: Chargeable (credit deduction per AI session based on LLM, TTS, and STT usage)

Async: Yes. AI sessions run asynchronously during calls. Monitor via GET /calls/{id} or WebSocket events.

VoIPBIN’s AI is a built-in AI agent that enables automated, intelligent voice interactions during live calls. The AI integrates with multiple LLM providers (OpenAI, Anthropic, Gemini, and 15+ others), real-time speech processing, and tool functions to create dynamic, interactive voice experiences.

Note

AI Implementation Hint

AI is configured in two layers: (1) a reusable AI configuration resource created via POST /ais (defines LLM, TTS, STT, and tools), and (2) a flow action (ai_talk or ai) that references the AI configuration or provides inline settings. For quick prototyping, use inline flow actions. For production, create a reusable AI resource and reference it by ai_id.
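The two layers described above can be sketched as two small payload builders. This is a minimal sketch, not the platform's client library: the field names for the POST /ais body come from the AI structure documented on this page, while the shape of the flow action (the `type` and `option` keys) is an assumption made for illustration. No HTTP request is sent.

```python
def build_ai_config(name, engine_model, engine_key, init_prompt,
                    tts_type="elevenlabs", stt_type="deepgram", tool_names=None):
    """Build a request body for POST /ais (layer 1: reusable AI resource)."""
    return {
        "name": name,
        "engine_model": engine_model,
        "engine_key": engine_key,
        "init_prompt": init_prompt,
        "tts_type": tts_type,
        "stt_type": stt_type,
        # An empty list means conversation-only (no tools enabled).
        "tool_names": list(tool_names) if tool_names is not None else [],
    }


def build_ai_talk_action(ai_id):
    """Build a flow action that references the AI resource (layer 2).

    ASSUMPTION: the "type"/"option" layout is hypothetical; check the flow
    action reference for the real shape.
    """
    return {"type": "ai_talk", "option": {"ai_id": ai_id}}


body = build_ai_config(
    name="Sales Assistant",
    engine_model="openai.gpt-4o",
    engine_key="sk-placeholder",
    init_prompt="You are a friendly sales assistant.",
    tool_names=["connect_call", "stop_service"],
)
action = build_ai_talk_action("a092c5d9-632c-48d7-b70b-499f2ca084b1")
```

Keeping the resource body and the flow action separate mirrors the recommendation above: the resource is created once and the action only carries its ai_id.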

How it works

Architecture Overview

VoIPBIN’s AI system consists of two main components working together: the AI Manager (Go) for orchestration and the Pipecat Manager (Python) for real-time audio processing.

+-----------------------------------------------------------------------+

| VoIPBIN AI Architecture |

+-----------------------------------------------------------------------+

+-------------------+

| Flow Manager |

| (ai_talk action) |

+--------+----------+

|

| Start AI session

v

+-------------------+ +-------------------+ +-------------------+

| | | | | |

| Asterisk |<------>| AI Manager |<------>| Pipecat Manager |

| (8kHz audio) | HTTP | (Go) | RMQ/WS | (Python) |

| | | | | |

+-------------------+ +--------+----------+ +--------+----------+

^ | |

| | |

| RTP audio | Tool | Real-time

| | execution | processing

v v v

+-------------------+ +-------------------+ +-------------------+

| User | | call-manager | | STT / LLM |

| (Phone) | | message-manager | | / TTS |

| | | email-manager | | Providers |

+-------------------+ +-------------------+ +-------------------+

Audio Flow

Audio flows through the system with sample rate conversion between components:

+-----------------------------------------------------------------------+

| Audio Flow |

+-----------------------------------------------------------------------+

User (Phone) VoIPBIN AI Providers

| | |

| RTP (8kHz PCM) | |

+------------------------------>| |

| | |

| +----------------+----------------+ |

| | | |

| v v |

| +---------------+ +------------------+ |

| | Asterisk | | Pipecat | |

| | (8kHz) |<------------>| (16kHz) | |

| +---------------+ WebSocket +------------------+ |

| | audio stream | |

| | v |

| | +------------------+ |

| | | Sample Rate | |

| | | Conversion | |

| | | 8kHz <-> 16kHz | |

| | +--------+---------+ |

| | | |

| | v |

| | +------------------+ |

| | | STT |--->|

| | | (Deepgram) | |

| | +------------------+ |

| | | |

| | | Text |

| | v |

| | +------------------+ |

| | | LLM |--->|

| | | (OpenAI/etc) | |

| | +------------------+ |

| | | |

| | | Response |

| | v |

| | +------------------+ |

| |<-------------------| TTS |<---|

| | Audio response | (ElevenLabs) | |

| | +------------------+ |

| | |

|<-------------+ |

| RTP audio playback |

| |
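To make the 8kHz <-> 16kHz conversion step in the diagram concrete, here is a deliberately naive sketch of 2x resampling on a list of integer PCM samples. It is an illustration only and is not how VoIPBIN or Pipecat resample audio; production resamplers apply proper low-pass filtering. The function names are hypothetical.

```python
def upsample_2x(samples):
    """8 kHz -> 16 kHz: insert the midpoint between each pair of neighbours."""
    out = []
    for i, s in enumerate(samples):
        out.append(s)
        # For the last sample there is no neighbour, so repeat it.
        nxt = samples[i + 1] if i + 1 < len(samples) else s
        out.append((s + nxt) // 2)
    return out


def downsample_2x(samples):
    """16 kHz -> 8 kHz: average each consecutive pair of samples."""
    return [(samples[i] + samples[i + 1]) // 2
            for i in range(0, len(samples) - 1, 2)]


narrowband = [0, 100, 200]
wideband = upsample_2x(narrowband)
back_to_narrowband = downsample_2x(wideband)
```

The upsampled stream has twice as many samples as the input, which is the property the STT/TTS side relies on when it expects 16kHz audio.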

AI Call Lifecycle

An AI call goes through several stages from initialization to termination:

+-----------------------------------------------------------------------+

| AI Call Lifecycle |

+-----------------------------------------------------------------------+

1. INITIALIZATION

+-------------------+ +-------------------+

| Flow Manager |------->| AI Manager |

| (ai_talk) | | Start AIcall |

+-------------------+ +--------+----------+

|

v

+-------------------+ +-------------------+

| Pipecat |<-------| Creates session |

| Initializing | | in database |

+-------------------+ +-------------------+

2. PROCESSING (Real-time conversation)

+-------------------+ +-------------------+

| User |<------>| Pipecat |

| speaks/listens | | STT->LLM->TTS |

+-------------------+ +-------------------+

^ |

| v

| +-------------------+

| | Tool Execution |

| | (if triggered) |

| +-------------------+

| |

+----------------------------+

3. TERMINATION

+-------------------+ +-------------------+

| stop_service |------->| AI Manager |

| or hangup | | Terminate |

+-------------------+ +--------+----------+

|

v

+-------------------+

| Cleanup session |

| Save messages |

+-------------------+

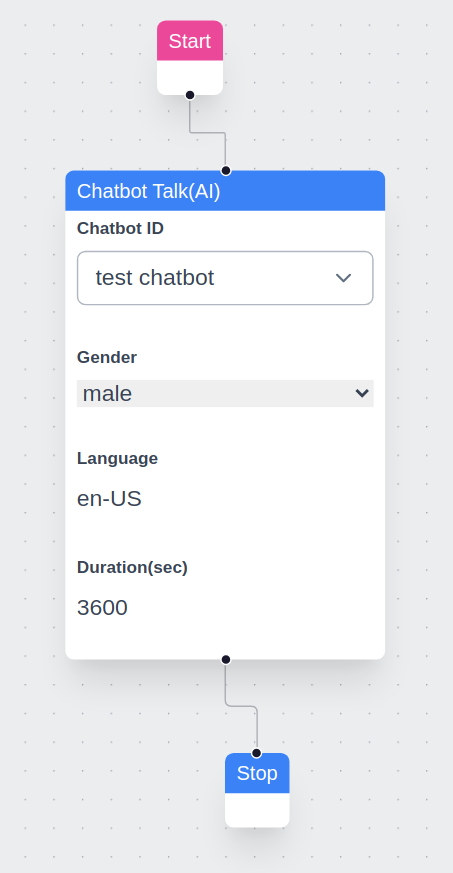

Action Component

The AI is integrated as a configurable action within VoIPBIN flows. When a call reaches an AI action, the system triggers the AI to generate responses based on the provided prompt.

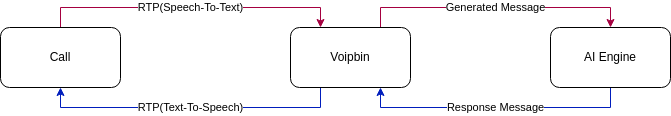

TTS/STT + AI Engine

VoIPBIN’s AI uses Speech-to-Text (STT) to convert spoken words into text, processes through the LLM, and Text-to-Speech (TTS) converts responses back to audio. This happens in real-time for seamless conversations.

Voice Detection and Play Interruption

VoIPBIN incorporates voice detection for natural conversational flow. While the AI is speaking (TTS playback), if the system detects the user’s voice, it immediately stops TTS and routes the user’s speech to STT and then to the LLM. This ensures user input is prioritized, enabling dynamic interaction that resembles real conversation.

+-----------------------------------------------------------------------+

| Voice Interruption Flow |

+-----------------------------------------------------------------------+

AI Speaking User Interrupts AI Listens

| | |

+---------v---------+ | |

| TTS audio plays | | |

| "I can help you | | |

| with that..." | | |

+-------------------+ | |

| | |

| <---- Voice detected ---->| |

| | |

+---------v---------+ | |

| STOP TTS | | |

| immediately | | |

+-------------------+ | |

| | |

+--------------------------->| |

| |

+--------v--------+ |

| User speaks: | |

| "Actually, I | |

| need help with | |

| something else"| |

+--------+--------+ |

| |

| STT -> LLM |

| |

+------------------------->|

+-------v-------+

| AI processes |

| new request |

+---------------+
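The interruption flow above can be modelled as a two-state controller. This is a minimal sketch under assumed names (`BargeInController`, `on_voice_detected`, and the event tuples are all hypothetical); the real pipeline works on audio frames and is considerably more involved.

```python
from enum import Enum


class State(Enum):
    LISTENING = "listening"
    SPEAKING = "speaking"


class BargeInController:
    """Tracks whether the AI is speaking and stops TTS when the user talks."""

    def __init__(self):
        self.state = State.LISTENING
        self.events = []  # recorded actions, in order

    def start_tts(self, text):
        self.state = State.SPEAKING
        self.events.append(("tts_start", text))

    def on_voice_detected(self):
        # Only an active playback can be interrupted.
        if self.state is State.SPEAKING:
            self.events.append(("tts_stop", None))
            self.state = State.LISTENING

    def on_transcript(self, text):
        # User speech is forwarded to the LLM once playback has stopped.
        if self.state is State.LISTENING:
            self.events.append(("llm_request", text))


ctrl = BargeInController()
ctrl.start_tts("I can help you with that...")
ctrl.on_voice_detected()
ctrl.on_transcript("Actually, I need help with something else")
```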

Context Retention

VoIPBIN’s AI supports context saving. During a conversation, the AI remembers prior exchanges, allowing it to maintain continuity and respond based on earlier parts of the interaction. This provides a more natural and human-like dialogue experience.

Multilingual Support

VoIPBIN’s AI supports multiple languages. See supported languages.

Tool Functions

AI tool functions enable the AI to take actions during conversations, such as transferring calls, sending messages, or managing the conversation flow.

Tool Execution Architecture

+-----------------------------------------------------------------------+

| Tool Execution Flow |

+-----------------------------------------------------------------------+

Step 1: User makes request

+-------------------+

| "Transfer me to |

| sales please" |

+--------+----------+

|

v

Step 2: Speech-to-Text

+-------------------+

| STT converts |

| audio to text |

+--------+----------+

|

v

Step 3: LLM Processing

+-------------------+

| LLM detects intent|

| Generates: |

| function_call: |

| connect_call |

+--------+----------+

|

v

Step 4: Tool Execution

+-------------------+ +-------------------+

| Python Pipecat |------->| Go AIcallHandler|

| sends HTTP POST | | ToolHandle() |

+-------------------+ +--------+----------+

|

v

+-------------------+

| Execute via |

| call-manager |

+--------+----------+

|

v

Step 5: Result returned

+-------------------+ +-------------------+

| Pipecat receives |<-------| Tool result |

| success/failure | | returned |

+--------+----------+ +-------------------+

|

v

Step 6: AI Response

+-------------------+

| LLM generates |

| "Connecting you |

| to sales now..." |

+--------+----------+

|

v

Step 7: TTS Playback

+-------------------+

| TTS converts to |

| audio, plays to |

| user |

+-------------------+

Available Tools

| Tool | Description |
|---|---|
| connect_call | Transfer or connect to another endpoint |
| send_email | Send an email message |
| send_message | Send an SMS text message |
| stop_media | Stop currently playing media |
| stop_service | End AI conversation (soft stop, flow continues) |
| stop_flow | Terminate entire flow (hard stop, call ends) |
| set_variables | Save data to flow context |
| get_variables | Retrieve data from flow context |
| get_aicall_messages | Get message history from an AI call |

For detailed documentation on each tool, see Tool Functions.

Configuring Tools

Tools are configured per-AI using the tool_names field (Array of String):

// Enable all tools

"tool_names": ["all"]

// Enable specific tools only

"tool_names": ["connect_call", "send_email", "stop_service"]

// Disable all tools (conversation-only)

"tool_names": []

Note

AI Implementation Hint

When using ["all"], the AI can invoke any available tool, including stop_flow which terminates the entire call. For customer-facing deployments, prefer listing specific tools explicitly to prevent unintended call terminations.
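As a sketch of how the three tool_names forms could be interpreted, the helper below expands a tool_names value into the set of enabled tools. The tool list is taken from the Available Tools table; the function name and the choice to reject unknown names (including "all" mixed with other names) are assumptions for illustration, not documented platform behavior.

```python
AVAILABLE_TOOLS = [
    "connect_call", "send_email", "send_message", "stop_media",
    "stop_service", "stop_flow", "set_variables", "get_variables",
    "get_aicall_messages",
]


def resolve_tool_names(tool_names):
    """Return the set of tools enabled by a tool_names value."""
    if tool_names == ["all"]:
        return set(AVAILABLE_TOOLS)
    # ASSUMPTION: anything not in the table is treated as an error here.
    unknown = [t for t in tool_names if t not in AVAILABLE_TOOLS]
    if unknown:
        raise ValueError(f"unknown tool names: {unknown}")
    return set(tool_names)


everything = resolve_tool_names(["all"])
explicit = resolve_tool_names(["connect_call", "send_email", "stop_service"])
conversation_only = resolve_tool_names([])
```

Note that `everything` contains stop_flow while `explicit` does not, which is the reason for preferring explicit lists in customer-facing deployments.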

Using the AI

Initial Prompt

The initial prompt serves as the foundation for the AI’s behavior. A well-crafted prompt ensures accurate and relevant responses. There is no enforced limit on prompt length. We recommend keeping the prompt confidential to ensure consistent performance and security.

Example Prompt:

Pretend you are an expert customer service agent.

Please respond kindly.

AI Talk

AI Talk enables real-time conversational AI with voice in VoIPBIN, powered by high-quality TTS engines (ElevenLabs, Deepgram, OpenAI, etc.) for natural-sounding speech.

Key Features

Real-time Voice Interaction: AI generates responses in real-time based on user input and delivers them as speech.

Interruption Detection & Listening: If the user speaks while the AI is talking, the system immediately stops the AI’s speech and captures the user’s voice via STT. This ensures smooth, continuous conversation flow.

Low Latency Response: For longer prompts, AI Talk generates and plays speech in smaller chunks, reducing perceived response time for the user.

Multiple TTS/STT Providers: Support for ElevenLabs, Deepgram, OpenAI, and many other providers.

Tool Function Integration: AI can perform actions like call transfers, sending messages, and managing variables during conversation.
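The "smaller chunks" idea in the Low Latency Response feature can be illustrated by splitting a response at sentence boundaries so that the first sentence can be synthesized while the rest is still pending. This is only a sketch of the general technique; the chunking strategy VoIPBIN actually uses is not described here, and `split_into_chunks` is a hypothetical name.

```python
import re


def split_into_chunks(text):
    """Split text after '.', '!' or '?' followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    # Drop empty strings produced by empty input.
    return [p for p in parts if p]


chunks = split_into_chunks(
    "Your order shipped yesterday. It will arrive by Friday! Anything else?"
)
```

Each chunk could then be handed to the TTS provider in turn, so playback of the first sentence starts before the full response has been synthesized.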

Built-in ElevenLabs Voice IDs

VoIPBIN uses a predefined set of voice IDs for various languages and genders. Here are the default ElevenLabs Voice IDs currently in use:

| Language | Male Voice ID (Name) | Female Voice ID (Name) | Neutral Voice ID (Name) |
|---|---|---|---|
| English (Default) | | | |
| Japanese | | | |
| Chinese | | | |
| German | | | |
| French | | | |
| Hindi | | | |
| Korean | | | |
| Italian | | | |
| Spanish (Spain) | | | |
| Portuguese (Brazil) | | | |
| Dutch | | | |
| Russian | | | |
| Arabic | | | |
| Polish | | | |

Other ElevenLabs Voice ID Options

VoIPBIN allows you to personalize the text-to-speech output by specifying a custom ElevenLabs Voice ID. By setting the voipbin.tts.elevenlabs.voice_id variable, you can override the default voice selection.

voipbin.tts.elevenlabs.voice_id: <Your Custom Voice ID>

See how to set the variables here.

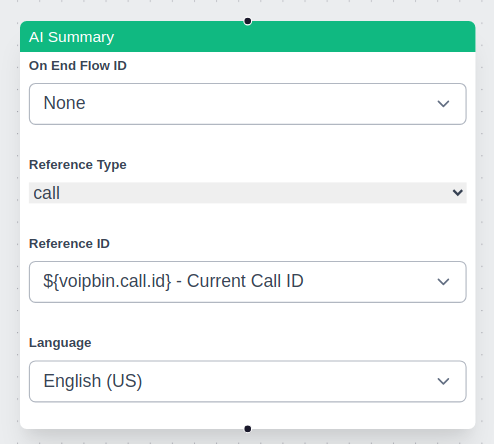

AI Summary

The AI Summary feature in VoIPBIN generates structured summaries of call transcriptions, recordings, or conference discussions. It provides a concise summary of key points, decisions, and action items based on the provided transcription source.

Supported Resources

AI summaries work with a single resource at a time. The supported resources are:

Real-time Summary:

* Live call transcription
* Live conference transcription

Non-Real-time Summary:

* Transcribed recordings (post-call)
* Recorded conferences (post-call)

Choosing Between Real-time and Non-Real-time Summaries

Developers must decide whether to use a real-time or non-real-time summary based on their needs:

| Use Case | Summary Type | Recommendation |
|---|---|---|
| Live call monitoring | Real-time | Use AI summary with a live call transcription |
| Live conference insights | Real-time | Use AI summary with a live conference transcription |
| Post-call analysis | Non-real-time | Use AI summary with transcribe_id from a completed call |
| Recorded conference summary | Non-real-time | Use AI summary with recording_id |

AI Summary Behavior

The summary action processes only one resource at a time.

If multiple AI summary actions are used in a flow, each executes independently.

If an AI summary action is triggered multiple times for the same resource, it only returns the most recent segment.

In conference calls, the summary is unified across all participants rather than per speaker.

Ensuring Full Coverage

Since starting an AI summary action late in the call results in missing earlier conversations, developers should follow best practices:

* Enable transcribe_start early: This ensures that transcriptions are available even if an AI summary action is triggered later.
* Use transcribe_id instead of call_id: This allows summarizing a full transcription rather than just the latest segment.
* For post-call summaries, use recording_id: This ensures that the full conversation is summarized from the recorded audio.

External AI Agent Integration

For users who prefer to use external AI services, VoIPBIN offers media stream access. This allows third-party AI engines to process voice data directly, enabling deeper customization and advanced AI capabilities.

MCP Server

A recommended open-source implementation is available here:

Common Scenarios

Scenario 1: Customer Service Agent

AI handles routine customer inquiries with tool actions.

Caller: "I want to check my order status"

|

v

+---------------------------+

| AI: "I'd be happy to help.|

| What's your order number?"|

+---------------------------+

|

v

Caller: "Order 12345"

|

v

+---------------------------+

| AI triggers tool: |

| get_variables(order_id) |

| -> Retrieves order data |

+---------------------------+

|

v

+---------------------------+

| AI: "Your order shipped |

| yesterday and will arrive |

| by Friday." |

+---------------------------+

Scenario 2: Appointment Scheduling

AI collects information and transfers to agent.

+------------------------------------------------+

| AI Interaction |

+------------------------------------------------+

| |

| AI: "Welcome! How can I help you today?" |

| |

| Caller: "I need to schedule an appointment" |

| |

| AI: "What day works best for you?" |

| |

| Caller: "Next Tuesday afternoon" |

| |

| AI: "Let me transfer you to our scheduling |

| team with this information." |

| |

| [Tool: set_variables(preferred_date, time)] |

| [Tool: connect_call(scheduling_queue)] |

| |

+------------------------------------------------+

Scenario 3: Interactive Voice Survey

AI collects survey responses with natural conversation.

AI Flow:

1. Greeting + consent

"This is a brief satisfaction survey. May I continue?"

2. Question 1 (scale)

"On a scale of 1-10, how satisfied are you?"

[Tool: set_variables(q1_score)]

3. Question 2 (open-ended)

"What could we improve?"

[Tool: set_variables(q2_feedback)]

4. Thank you + end

"Thank you for your feedback!"

[Tool: stop_service]

Best Practices

1. Prompt Design

Keep prompts clear and focused on specific tasks

Include examples of expected responses

Define the AI’s persona and tone

Specify what tools the AI should use and when

2. Tool Configuration

Enable only tools the AI needs for its task

Use ["all"] cautiously; prefer specific tool lists

Test tool interactions thoroughly before deployment

Handle tool failures gracefully in prompts

3. Conversation Flow

Set appropriate timeouts for user responses

Use voice detection settings that match your use case

Enable context retention for multi-turn conversations

Plan exit paths (transfer, end call, escalation)

4. Audio Quality

Choose TTS voices appropriate for your language/region

Test audio quality across different phone networks

Consider latency when selecting STT/TTS providers

Use 16kHz providers for better quality when possible

Troubleshooting

Note

AI Implementation Hint

When diagnosing AI call issues, check these endpoints in order: (1) GET /calls/{id} to verify call status and hangup reason, (2) GET /activeflows/{id} to check flow execution state, (3) WebSocket events for real-time error notifications.

Common HTTP Errors

- 400 Bad Request:

Cause: Invalid engine_model format. Must be <provider>.<model> (e.g., openai.gpt-4o).

Fix: Verify the format matches the provider table in Engine Models.

- 402 Payment Required:

Cause: Insufficient account balance for AI session (LLM + TTS + STT costs).

Fix: Check balance via GET /billing-accounts. Top up before retrying.

- 404 Not Found:

Cause: The ai_id does not exist or belongs to a different customer.

Fix: Verify the UUID was obtained from GET /ais or POST /ais.

- 500 Internal Server Error:

Cause: LLM provider API key is invalid or the provider is unavailable.

Fix: Verify engine_key is correct. Check the provider’s status page.

Audio Issues

| Symptom | Solution |
|---|---|
| No audio from AI | Check Pipecat connection; verify TTS provider credentials; check audio routing |
| Choppy or delayed audio | Check network latency; try different TTS provider; verify sample rate conversion |
| User not heard | Check STT configuration; verify microphone audio is reaching the system |

AI Response Issues

| Symptom | Solution |
|---|---|
| AI gives wrong answers | Review and refine prompt; add examples; check context length limits |
| AI doesn’t use tools | Verify tool_names configuration; check tool descriptions in prompt; review LLM response |
| Tool execution fails | Check tool handler logs; verify target service (call-manager, etc.) is available |

Connection Issues

| Symptom | Solution |
|---|---|
| AI session won’t start | Check AI Manager connectivity; verify Pipecat is running; check database connection |
| Session drops unexpectedly | Check timeout settings; review AI Manager logs for errors; verify WebSocket stability |

AI

AI

{
    "id": "<string>",
    "customer_id": "<string>",
    "name": "<string>",
    "detail": "<string>",
    "engine_model": "<string>",
    "parameter": "<object>",
    "engine_key": "<string>",
    "init_prompt": "<string>",
    "tts_type": "<string>",
    "tts_voice_id": "<string>",
    "stt_type": "<string>",
    "tool_names": ["<string>"],
    "tm_create": "<string>",
    "tm_update": "<string>",
    "tm_delete": "<string>"
}

id (UUID): The AI configuration’s unique identifier. Returned when creating an AI via POST /ais or when listing AIs via GET /ais.

customer_id (UUID): The customer that owns this AI configuration. Obtained from the id field of GET /customers.

name (String, Required): A human-readable name for the AI configuration (e.g., "Sales Assistant").

detail (String, Optional): A description of the AI’s purpose or additional notes.

engine_model (String, Required): The LLM provider and model. Format: <provider>.<model> (e.g., openai.gpt-4o, anthropic.claude-3-5-sonnet). See Engine Models.

parameter (Object, Optional): Custom key-value parameter data for the AI configuration. Supports flow variable substitution at runtime. Typically left as {}.

engine_key (String, Required): The API key for the LLM provider. Must be a valid key from the provider’s dashboard.

init_prompt (String, Required): The system prompt that defines the AI’s behavior, persona, and instructions. No enforced length limit.

tts_type (enum string, Required): Text-to-Speech provider. See TTS Types.

tts_voice_id (String, Optional): Voice ID for the selected TTS provider. If omitted, the default voice for the chosen TTS type is used. See default voices in TTS Types.

stt_type (enum string, Required): Speech-to-Text provider. See STT Types.

tool_names (Array of String, Optional): List of enabled tool functions. Use ["all"] to enable all tools, [] to disable all tools, or list specific tool names. See Tool Functions.

tm_create (String, ISO 8601): Timestamp when the AI configuration was created.

tm_update (String, ISO 8601): Timestamp when the AI configuration was last updated.

tm_delete (String, ISO 8601): Timestamp when the AI configuration was deleted, if applicable.

Note

AI Implementation Hint

The engine_key field contains the LLM provider’s API key. This key is write-only: it is accepted on POST /ais and PUT /ais but is never returned in GET responses for security. If you need to change the key, send a full PUT update with the new key.

Note

AI Implementation Hint

A tm_delete value of 9999-01-01 00:00:00.000000 indicates the AI configuration has not been deleted and is still active. This sentinel value is used across all VoIPBIN resources to represent “not yet occurred.”
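A client can treat the sentinel as "no timestamp" when reading resources. The sketch below uses the sentinel string and the timestamp layout shown in the example on this page; the helper names are hypothetical, and treating a missing tm_delete field as "not deleted" is an assumption.

```python
from datetime import datetime

SENTINEL = "9999-01-01 00:00:00.000000"
TIMESTAMP_FORMAT = "%Y-%m-%d %H:%M:%S.%f"


def parse_timestamp(value):
    """Return a datetime, or None when the value is the sentinel."""
    if value == SENTINEL:
        return None
    return datetime.strptime(value, TIMESTAMP_FORMAT)


def is_deleted(resource):
    """True when tm_delete holds a real timestamp rather than the sentinel."""
    # ASSUMPTION: a resource without tm_delete is considered active.
    return resource.get("tm_delete", SENTINEL) != SENTINEL


ai = {
    "tm_create": "2024-02-09 07:01:35.666687",
    "tm_update": "9999-01-01 00:00:00.000000",
    "tm_delete": "9999-01-01 00:00:00.000000",
}
created = parse_timestamp(ai["tm_create"])
updated = parse_timestamp(ai["tm_update"])
```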

Example

{
    "id": "a092c5d9-632c-48d7-b70b-499f2ca084b1",
    "customer_id": "5e4a0680-804e-11ec-8477-2fea5968d85b",
    "name": "Sales Assistant AI",
    "detail": "AI assistant for handling sales inquiries",
    "engine_model": "openai.gpt-4o",
    "parameter": {},
    "engine_key": "sk-...",
    "init_prompt": "You are a friendly sales assistant. Help customers find the right products.",
    "tts_type": "elevenlabs",
    "tts_voice_id": "EXAVITQu4vr4xnSDxMaL",
    "stt_type": "deepgram",
    "tool_names": ["connect_call", "send_email", "stop_service"],
    "tm_create": "2024-02-09 07:01:35.666687",
    "tm_update": "9999-01-01 00:00:00.000000",
    "tm_delete": "9999-01-01 00:00:00.000000"
}

Engine Model

The engine_model field specifies which LLM provider and model to use. Format: <provider>.<model>.
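Because some model names contain dots or slashes themselves (for example cerebras.llama3.1-8b or openrouter.meta-llama/llama-3-70b), a client-side check should split only on the first dot. The following is a minimal sketch of such a pre-flight check; `parse_engine_model` is a hypothetical helper, and the server's own validation rules may be stricter.

```python
def parse_engine_model(engine_model):
    """Split '<provider>.<model>' on the first dot and return both parts."""
    provider, sep, model = engine_model.partition(".")
    if not sep or not provider or not model:
        raise ValueError(
            f"engine_model must look like <provider>.<model>, got {engine_model!r}"
        )
    return provider, model


simple = parse_engine_model("openai.gpt-4o")
dotted = parse_engine_model("cerebras.llama3.1-8b")
slashed = parse_engine_model("openrouter.meta-llama/llama-3-70b")
```

Running a check like this before calling POST /ais is one way to catch the formatting mistake behind the 400 Bad Request case early.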

Supported Providers

| Provider | Format | Examples |
|---|---|---|
| OpenAI | | openai.gpt-4o, openai.gpt-4o-mini |
| Anthropic | | anthropic.claude-3-5-sonnet |
| AWS Bedrock | | aws.claude-3-sonnet |
| Azure OpenAI | | azure.gpt-4 |
| Cerebras | | cerebras.llama3.1-8b |
| DeepSeek | | deepseek.deepseek-chat |
| Fireworks | | fireworks.llama-v3-70b |
| Google Gemini | | gemini.gemini-1.5-pro |
| Grok | | grok.grok-1 |
| Groq | | groq.llama3-70b-8192 |
| Mistral | | mistral.mistral-large |
| NVIDIA NIM | | nvidia.llama3-70b |
| Ollama | | ollama.llama3 |
| OpenRouter | | openrouter.meta-llama/llama-3-70b |
| Perplexity | | perplexity.llama-3-sonar-large |
| Qwen | | qwen.qwen-max |
| SambaNova | | sambanova.llama3-70b |
| Together AI | | together.meta-llama/Llama-3-70b |
| Dialogflow | | dialogflow.cx, dialogflow.es |

Common OpenAI Models

| Model | Description |
|---|---|
| gpt-4o | Latest GPT-4 Omni model (recommended) |
| gpt-4o-mini | Smaller, faster GPT-4 Omni variant |
| gpt-4-turbo | GPT-4 Turbo with vision capabilities |
| gpt-4 | Original GPT-4 model |
| gpt-3.5-turbo | Fast and cost-effective model |
| o1 | OpenAI o1 reasoning model |
| o1-mini | Smaller o1 reasoning model |
| o3-mini | Latest o3 mini reasoning model |

TTS Type

Text-to-Speech provider for converting AI responses to audio.

| Type | Description |
|---|---|
| elevenlabs | ElevenLabs high-quality voice synthesis (recommended) |
| deepgram | Deepgram Aura voices |
| openai | OpenAI TTS (alloy, echo, fable, etc.) |
| aws | AWS Polly voices |
| azure | Azure Cognitive Services TTS |
| | Google Cloud Text-to-Speech |
| cartesia | Cartesia TTS |
| hume | Hume AI emotional TTS |
| playht | PlayHT voice synthesis |

Default Voice IDs by TTS Type

| TTS Type | Default Voice ID |
|---|---|
| elevenlabs | EXAVITQu4vr4xnSDxMaL (Rachel) |
| deepgram | aura-2-thalia-en (Thalia) |
| openai | alloy |
| aws | Joanna |
| azure | en-US-JennyNeural |
| | en-US-Wavenet-D |
| cartesia | 71a7ad14-091c-4e8e-a314-022ece01c121 |

STT Type

Speech-to-Text provider for converting incoming audio to text.

| Type | Description |
|---|---|
| deepgram | Deepgram speech recognition (recommended) |
| cartesia | Cartesia speech recognition |
| elevenlabs | ElevenLabs speech recognition |

Tool Names

The tool_names field controls which tool functions the AI can invoke during conversations.

Configuration Options

| Value | Description |
|---|---|
| | Enable all available tool functions |
| | Disable all tool functions (AI can only converse) |
| | Enable only the specified tools |

Available Tools

See Tool Functions for the complete list of tools and their descriptions.

Example configurations:

// Enable all tools

"tool_names": ["all"]

// Enable only call transfer and email

"tool_names": ["connect_call", "send_email"]

// Enable conversation control tools only

"tool_names": ["stop_service", "stop_flow", "set_variables"]

// Disable all tools (conversation-only AI)

"tool_names": []

Tool Functions

Tool functions enable the AI to perform actions during voice conversations. When the AI determines that an action is needed based on the conversation context, it can invoke the appropriate tool function.

Note

AI Implementation Hint

Tool functions are invoked by the LLM, not by your application code. You configure which tools are available via the tool_names field in the AI configuration (POST /ais or inline flow action). The LLM decides when to call a tool based on conversation context and the tool’s parameter schema. Your prompt should describe when each tool should be used.

Overview

Caller AI Engine VoIPBIN Platform

| | |

| "Transfer me to sales" | |

+--------------------------->| |

| | |

| | Detects intent |

| | Invokes connect_call |

| +----------------------------->|

| | |

| | Tool result|

| |<-----------------------------+

| | |

| "Connecting you now..." | |

|<---------------------------+ |

| | |

+-------------- Call transferred to sales ----------------->|

Available Tools

| Tool Name | Description |
|---|---|
| connect_call | Transfer or connect to another endpoint |
| send_email | Send an email message |
| send_message | Send an SMS text message |
| stop_media | Stop currently playing media |
| stop_service | End the AI conversation (soft stop) |
| stop_flow | Terminate the entire flow (hard stop) |
| set_variables | Save data to flow context |
| get_variables | Retrieve data from flow context |
| get_aicall_messages | Get message history from an AI call |

connect_call

Connects or transfers the user to another endpoint (person, department, or phone number).

Note

AI Implementation Hint

The connect_call tool creates a new outgoing call and bridges it with the current call. The source.type determines the caller ID shown to the destination. For PSTN transfers, use type: "tel" with an E.164 phone number (e.g., +15551234567). For internal transfers, use type: "extension" with the extension name.

When to use:

Caller requests a transfer: “transfer me to…”, “connect me to…”

Caller wants to speak to a person: “let me talk to a human”, “I need an agent”

Caller requests a specific department: “sales”, “support”, “billing”

Caller provides a phone number: “call +1234567890”

Parameters:

{
    "type": "object",
    "properties": {
        "run_llm": {
            "type": "boolean",
            "description": "Set true to speak after connecting. Set false for silent transfer.",
            "default": false
        },
        "source": {
            "type": "object",
            "properties": {
                "type": { "type": "string", "description": "agent, conference, extension, sip, or tel" },
                "target": { "type": "string", "description": "Source address/identifier" },
                "target_name": { "type": "string", "description": "Display name (optional)" }
            },
            "required": ["type", "target"]
        },
        "destinations": {
            "type": "array",
            "items": {
                "type": "object",
                "properties": {
                    "type": { "type": "string", "description": "agent, conference, extension, line, sip, tel" },
                    "target": { "type": "string", "description": "Destination address" },
                    "target_name": { "type": "string", "description": "Display name (optional)" }
                },
                "required": ["type", "target"]
            }
        }
    },
    "required": ["destinations"]
}
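The schema above can be turned into a small argument check, for instance when testing what an LLM emits for connect_call. This is a sketch only: the set of destination types is copied from the schema description, `validate_connect_call` is a hypothetical helper, and requiring at least one destination is an assumption (the schema itself only marks destinations as required).

```python
DESTINATION_TYPES = {"agent", "conference", "extension", "line", "sip", "tel"}


def validate_connect_call(args):
    """Return a list of problems found in connect_call arguments."""
    destinations = args.get("destinations")
    # ASSUMPTION: an empty destinations array is treated as invalid.
    if not isinstance(destinations, list) or not destinations:
        return ["destinations must be a non-empty array"]

    errors = []
    for i, dest in enumerate(destinations):
        for key in ("type", "target"):
            if not dest.get(key):
                errors.append(f"destinations[{i}].{key} is required")
        if dest.get("type") and dest["type"] not in DESTINATION_TYPES:
            errors.append(f"destinations[{i}].type is not a known type")
    return errors


ok = validate_connect_call(
    {"run_llm": False, "destinations": [{"type": "extension", "target": "sales"}]}
)
missing = validate_connect_call({})
bad_type = validate_connect_call({"destinations": [{"type": "fax", "target": "x"}]})
```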

Examples:

"Transfer me to sales" -> type="extension", target="sales"

"Call my wife at 555-1234" -> type="tel", target="+15551234"

"I need a human agent" -> type="agent", target=appropriate agent

send_email

Sends an email to one or more email addresses.

When to use:

Caller explicitly requests email: “email me”, “send me an email”

Caller asks for documents to be emailed

Caller provides an email address for receiving information

Parameters:

{
    "type": "object",
    "properties": {
        "run_llm": {
            "type": "boolean",
            "description": "Set true to confirm verbally after sending.",
            "default": false
        },
        "destinations": {
            "type": "array",
            "items": {
                "type": "object",
                "properties": {
                    "type": { "type": "string", "enum": ["email"] },
                    "target": { "type": "string", "description": "Email address" },
                    "target_name": { "type": "string", "description": "Recipient name (optional)" }
                },
                "required": ["type", "target"]
            }
        },
        "subject": { "type": "string", "description": "Email subject line" },
        "content": { "type": "string", "description": "Email body content" },
        "attachments": {
            "type": "array",
            "items": {
                "type": "object",
                "properties": {
                    "reference_type": { "type": "string", "enum": ["recording"] },
                    "reference_id": { "type": "string", "description": "UUID of the attachment" }
                }
            }
        }
    },
    "required": ["destinations", "subject", "content"]
}

send_message

Sends an SMS text message to a phone number.

Note

AI Implementation Hint

Phone numbers in source.target and destinations[].target must be in E.164 format (e.g., +15551234567). If the user provides a local number like 555-1234, the LLM must normalize it to E.164 before invoking this tool. The source phone number must be a number owned by your VoIPBIN account (obtainable via GET /numbers).

When to use:

Caller explicitly requests a text: “text me”, “send me a text”, “SMS me”

Caller asks for information sent to their phone

Caller provides a phone number for messaging

Parameters:

{
    "type": "object",
    "properties": {
        "run_llm": {
            "type": "boolean",
            "description": "Set true to confirm verbally after sending.",
            "default": false
        },
        "source": {
            "type": "object",
            "properties": {
                "type": { "type": "string", "enum": ["tel"] },
                "target": { "type": "string", "description": "Source phone number (+E.164)" },
                "target_name": { "type": "string", "description": "Display name (optional)" }
            },
            "required": ["type", "target"]
        },
        "destinations": {
            "type": "array",
            "items": {
                "type": "object",
                "properties": {
                    "type": { "type": "string", "enum": ["tel"] },
                    "target": { "type": "string", "description": "Destination phone number (+E.164)" },
                    "target_name": { "type": "string", "description": "Recipient name (optional)" }
                },
                "required": ["type", "target"]
            }
        },
        "text": { "type": "string", "description": "SMS message content" }
    },
    "required": ["destinations", "text"]
}
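The E.164 requirement for send_message can be checked with a short pattern: a plus sign followed by up to 15 digits, the first of which is not zero. The sketch below also shows one possible normalization for 10-digit national numbers; the default country code of 1 and the helper names are assumptions for illustration, and in practice the LLM or your own code must know the caller's country.

```python
import re

E164_PATTERN = re.compile(r"\+[1-9]\d{1,14}")


def is_e164(number):
    """True when the whole string is a plus sign followed by 2-15 digits."""
    return E164_PATTERN.fullmatch(number) is not None


def normalize_number(raw, default_country_code="1"):
    """Best-effort conversion to E.164; returns None when it cannot decide."""
    digits = re.sub(r"\D", "", raw)
    if raw.strip().startswith("+"):
        candidate = "+" + digits
    elif len(digits) == 10:
        # ASSUMPTION: a 10-digit number belongs to the default country.
        candidate = "+" + default_country_code + digits
    elif len(digits) == 11 and digits.startswith(default_country_code):
        candidate = "+" + digits
    else:
        return None
    return candidate if is_e164(candidate) else None


full = normalize_number("(555) 123-4567")
already = normalize_number("+15551234567")
too_short = normalize_number("555-1234")
```

A 7-digit local number such as 555-1234 cannot be normalized without an area code, so the sketch returns None rather than guessing.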

stop_media

Stops media from a previous action that is currently playing on the call.

When to use:

AI has finished loading and needs to stop hold music or greeting

Previous flow action’s media playback should stop before AI speaks

Transitioning from pre-recorded media to live AI conversation

Parameters:

{

"type": "object",

"properties": {

"run_llm": {

"type": "boolean",

"description": "Set true to speak after stopping media.",

"default": false

}

}

}

Comparison with other stop tools:

+-------------+------------------------------------------+
| Tool        | Effect                                   |
+=============+==========================================+
| stop_media  | Stop previous action's media playback    |
|             | AI conversation continues                |
+-------------+------------------------------------------+
| stop_service| End AI conversation                      |
|             | Flow continues to next action            |
+-------------+------------------------------------------+
| stop_flow   | Terminate everything                     |
|             | Call ends, no further actions            |
+-------------+------------------------------------------+

stop_service

Ends the AI conversation and proceeds to the next action in the flow.

When to use:

Caller says goodbye: “bye”, “goodbye”, “thanks, that’s all”

Caller indicates they’re done: “I’m all set”, “that’s everything”

AI has successfully completed its purpose (appointment booked, issue resolved)

Natural conversation conclusion

When NOT to use:

Caller is frustrated but still needs help (de-escalate instead)

Conversation has unresolved issues

Caller wants to end the entire call (use stop_flow instead)

Parameters:

{

"type": "object",

"properties": {}

}

Examples:

"Thanks, bye!" -> stop_service (natural end)

"I'm done here" -> stop_service (completion signal)

After booking appointment -> stop_service (task complete)

"Great, that's all I needed" -> stop_service

stop_flow

Immediately terminates the entire flow and call. Nothing executes after this.

When to use:

Caller explicitly wants to end everything: “hang up”, “end the call”, “disconnect”

Critical error requiring full termination

Emergency stop needed

When NOT to use:

Caller just wants to end AI conversation (use stop_service instead)

Caller says casual goodbye (use stop_service instead)

There are more flow actions that should execute after AI

Parameters:

{

"type": "object",

"properties": {}

}

Examples:

"Hang up now" -> stop_flow

"End this call immediately" -> stop_flow

"Terminate the call" -> stop_flow

set_variables

Saves key-value data to the flow context for later use by downstream actions.

Note

AI Implementation Hint

Variables set via set_variables are accessible in subsequent flow actions using the ${variable_name} syntax. Variable names are case-sensitive strings. Values are always stored as strings. These variables persist for the duration of the flow execution and are included in webhook events.

When to use:

Save information collected during conversation (name, account number, preferences)

Record conclusions (appointment time, issue category, resolution)

Store data needed by subsequent flow actions

Parameters:

{

"type": "object",

"properties": {

"run_llm": {

"type": "boolean",

"description": "Set true to continue conversation after saving.",

"default": false

},

"variables": {

"type": "object",

"description": "Key-value pairs to save",

"additionalProperties": { "type": "string" }

}

},

"required": ["variables"]

}

Examples:

"My name is John Smith" -> set_variables({"customer_name": "John Smith"})

"3pm works for me" -> set_variables({"appointment_time": "15:00"})

Issue categorized as billing -> set_variables({"issue_category": "billing"})

Account number provided -> set_variables({"account_number": "12345"})
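To illustrate how saved variables might be consumed downstream, here is a small sketch of `${variable_name}` substitution. The functions `set_variables` and `render` are local stand-ins written for this example; how VoIPBIN resolves undefined variables is not stated here, so leaving them untouched is an assumption.

```python
import re

# Matches ${name}; names may contain letters, digits, underscores, and dots
# (dots appear in built-in names such as voipbin.call.id).
_VAR = re.compile(r"\$\{([A-Za-z0-9_.]+)\}")


def set_variables(context: dict, variables: dict) -> None:
    """Store each value as a string, matching the 'always strings' rule."""
    for name, value in variables.items():
        context[name] = str(value)


def render(template: str, context: dict) -> str:
    """Replace ${name} with its stored value; unknown names are left as-is.

    Leaving unknown names untouched is an assumption of this sketch.
    """
    return _VAR.sub(lambda m: context.get(m.group(1), m.group(0)), template)


ctx = {}
set_variables(ctx, {"customer_name": "John Smith", "appointment_time": "15:00"})
print(render("Hi ${customer_name}, see you at ${appointment_time}.", ctx))
# Hi John Smith, see you at 15:00.
print(render("${Customer_Name}", ctx))  # names are case-sensitive, so unchanged
```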

get_variables

Retrieves previously saved variables from the flow context.

When to use:

Need context set earlier in the flow

Need information from previous actions (confirmation number, customer info)

Caller asks about something in saved context

Before performing an action requiring previously collected data

Parameters:

{

"type": "object",

"properties": {

"run_llm": {

"type": "boolean",

"description": "Set true to respond using retrieved data.",

"default": false

}

}

}

Examples:

Need customer name from earlier -> get_variables

"What was my confirmation?" -> get_variables

Before sending SMS -> get_variables (to get phone number)

get_aicall_messages

Retrieves message history from a specific AI call session.

Note

AI Implementation Hint

The aicall_id (UUID) must reference a completed or active AI call session. You can obtain this ID from the ${voipbin.aicall.id} flow variable during the current call, or from a previous call’s webhook event data. This tool is most useful for multi-call workflows where AI needs context from a prior conversation.

When to use:

Need message history from a different AI call (not current conversation)

Building summaries of past conversations

Caller asks about previous interactions: “what did we discuss last time?”

Referencing a specific past call by ID

When NOT to use:

Current conversation history is sufficient (already in AI context)

Need saved variables, not messages (use get_variables instead)

No specific aicall_id to query

Parameters:

{

"type": "object",

"properties": {

"run_llm": {

"type": "boolean",

"description": "Set true to respond based on retrieved messages.",

"default": false

},

"aicall_id": {

"type": "string",

"description": "UUID of the AI call to retrieve messages from"

}

},

"required": ["aicall_id"]

}
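Because aicall_id must be a UUID, it can help to reject malformed IDs before the tool is invoked. The sketch below uses Python's standard `uuid` module; the helper name `build_get_aicall_messages_args` is hypothetical.

```python
import uuid


def build_get_aicall_messages_args(aicall_id: str, run_llm: bool = False) -> dict:
    """Build arguments for get_aicall_messages, rejecting malformed UUIDs early.

    Hypothetical helper; uuid.UUID raises ValueError on malformed input.
    """
    normalized = str(uuid.UUID(aicall_id))  # canonical lowercase, hyphenated form
    return {"aicall_id": normalized, "run_llm": run_llm}


args = build_get_aicall_messages_args(
    "A1B2C3D4-E5F6-7890-ABCD-EF1234567890", run_llm=True
)
print(args)
# {'aicall_id': 'a1b2c3d4-e5f6-7890-abcd-ef1234567890', 'run_llm': True}
```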

run_llm Parameter

Most tools include a run_llm parameter that controls whether the AI should generate a response after the tool executes.

+-------------------+--------------------------------------------------+
| run_llm = true    | AI speaks after tool execution                   |
|                   | Example: "I've sent that to your email"          |
+-------------------+--------------------------------------------------+
| run_llm = false   | Tool executes silently                           |
|                   | Useful for chaining multiple tools               |
+-------------------+--------------------------------------------------+

Default: Most tools default to run_llm = false for silent execution.
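As an illustration of chaining, the sketch below marks every tool call in a sequence as silent except the last, so the AI speaks once at the end. The `{"name": ..., "arguments": ...}` shape and the helper `chain_tool_calls` are assumptions made for this example, not a documented VoIPBIN structure.

```python
def chain_tool_calls(calls: list[dict]) -> list[dict]:
    """Return copies of the calls with run_llm false except on the last one.

    Hypothetical helper: intermediate tools run silently and the AI speaks
    once, after the final tool.
    """
    chained = []
    for index, call in enumerate(calls):
        is_last = index == len(calls) - 1
        args = {**call["arguments"], "run_llm": is_last}
        chained.append({"name": call["name"], "arguments": args})
    return chained


calls = chain_tool_calls([
    {"name": "get_variables", "arguments": {}},
    {"name": "send_message", "arguments": {
        "destinations": [{"type": "tel", "target": "+15559876543"}],
        "text": "Your confirmation number is 12345.",
    }},
])
for call in calls:
    print(call["name"], call["arguments"]["run_llm"])
# get_variables False
# send_message True
```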

Tool Execution Flow

+-----------------------------------------------------------------+
|                   Tool Execution Architecture                   |
+-----------------------------------------------------------------+

Caller speaks        Python Pipecat              Go AIcallHandler
     |                      |                            |
     | "Transfer me to      |                            |
     |  sales please"       |                            |
     +--------------------->|                            |
     |                      |                            |
     |                STT converts                       |
     |                to text                            |
     |                      |                            |
     |                LLM detects intent                 |
     |                function_call: connect_call        |
     |                      |                            |
     |                      | HTTP POST                  |
     |                      | /tool/execute              |
     |                      +--------------------------->|
     |                      |                            |
     |                      |             Execute tool   |
     |                      |             (call-manager) |
     |                      |                            |
     |                      | Tool result                |
     |                      |<---------------------------+
     |                      |                            |
     |                LLM generates                      |
     |                response                           |
     |                      |                            |
     | TTS: "Connecting     |                            |
     | you to sales now"    |                            |
     |<---------------------+                            |
     |                      |                            |
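The round trip in the diagram can be approximated in a few lines. This is only a sketch: the HTTP call to /tool/execute is replaced by a local function, and the handler registry, the result fields, and the `destinations` argument of connect_call are all assumptions made for illustration.

```python
from typing import Callable

# Hypothetical registry standing in for the Go side, which the diagram shows
# receiving POST /tool/execute and delegating to services such as call-manager.
HANDLERS: dict[str, Callable[[dict], dict]] = {
    "connect_call": lambda args: {"status": "connecting", "to": args["destinations"]},
    "stop_service": lambda args: {"status": "stopped"},
}


def execute_tool(name: str, arguments: dict) -> dict:
    """Stand-in for the HTTP round trip: run the tool and return its result."""
    handler = HANDLERS.get(name)
    if handler is None:
        return {"status": "error", "message": f"unknown tool: {name}"}
    return handler(arguments)


def handle_function_call(function_call: dict) -> tuple[dict, bool]:
    """Execute the tool, then report whether the LLM should speak next."""
    arguments = function_call.get("arguments", {})
    result = execute_tool(function_call["name"], arguments)
    speak = bool(arguments.get("run_llm", False))
    return result, speak


result, speak = handle_function_call({
    "name": "connect_call",
    "arguments": {
        "run_llm": True,
        "destinations": [{"type": "tel", "target": "+15551111111"}],
    },
})
print(result["status"], speak)  # connecting True
```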

Best Practices

1. Enable only needed tools

// Good: Only enable tools the AI actually needs

"tool_names": ["connect_call", "stop_service"]

// Avoid: Enabling all tools when only some are needed

"tool_names": ["all"]

2. Use stop_service vs stop_flow correctly

stop_service = Soft stop (AI ends, flow continues)

- User says "goodbye"

- Task completed successfully

stop_flow = Hard stop (everything ends)

- User says "hang up"

- Critical error

3. Clarify ambiguous requests

When a user says “send me that information,” the AI should ask:

"Would you like that by email or text message?"

This ensures the correct tool (send_email vs send_message) is used.

4. Use run_llm appropriately

// Silent operations (chaining tools)

"run_llm": false

// User-facing confirmations

"run_llm": true -> "I've connected you to the sales department"

Tutorial

Before using AI features, you need:

A valid authentication token (String). Obtain via POST /auth/login or use an accesskey from GET /accesskeys.

A source phone number in E.164 format (e.g., +15551234567). Obtain one owned by your account via GET /numbers.

A destination phone number in E.164 format or an internal extension.

An LLM provider API key (String). Obtain from your provider’s dashboard (e.g., OpenAI, Anthropic).

(Optional) A pre-created AI configuration (UUID). Create one via POST /ais or use inline action settings.

(Optional) A flow ID (UUID). Create one via POST /flows or obtain from GET /flows.

Note

AI Implementation Hint

AI features use three external services: an LLM (e.g., OpenAI), a TTS provider (e.g., ElevenLabs), and an STT provider (e.g., Deepgram). Each incurs costs on both VoIPBIN credits and the external provider’s billing. Verify your VoIPBIN balance via GET /billing-accounts and your provider API key validity before creating AI calls.

Simple AI Voice Assistant

Create a basic AI voice assistant that answers questions during a call. The AI will listen to the user’s speech, process it, and respond using text-to-speech.

$ curl --location --request POST 'https://api.voipbin.net/v1.0/calls?token=<YOUR_AUTH_TOKEN>' \

--header 'Content-Type: application/json' \

--data-raw '{

"source": {

"type": "tel",

"target": "+15551234567"

},

"destinations": [

{

"type": "tel",

"target": "+15559876543"

}

],

"actions": [

{

"type": "answer"

},

{

"type": "ai",

"option": {

"initial_prompt": "You are a helpful customer service assistant. Answer questions politely and concisely.",

"language": "en-US",

"voice_type": "female"

}

}

]

}'

Response:

{

"id": "a1b2c3d4-e5f6-7890-abcd-ef1234567890", // Save this as call_id

"status": "dialing",

"source": {"type": "tel", "target": "+15551234567"},

"destination": {"type": "tel", "target": "+15559876543"},

"direction": "outgoing",

"tm_create": "2026-02-18T10:30:00Z"

}
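For callers who prefer Python over curl, the same request might be assembled as follows. This is a sketch using only the standard library; it builds the request without sending it, and the helper name `build_ai_call_request` is hypothetical. The endpoint, token query parameter, and body fields are taken from the curl example above.

```python
import json
import urllib.parse
import urllib.request

API_BASE = "https://api.voipbin.net/v1.0"


def build_ai_call_request(token: str, source: str, destination: str,
                          prompt: str, action_type: str = "ai") -> urllib.request.Request:
    """Build (but do not send) the POST /calls request shown above."""
    body = {
        "source": {"type": "tel", "target": source},
        "destinations": [{"type": "tel", "target": destination}],
        "actions": [
            {"type": "answer"},
            {
                "type": action_type,  # "ai" or "ai_talk"
                "option": {
                    "initial_prompt": prompt,
                    "language": "en-US",
                    "voice_type": "female",
                },
            },
        ],
    }
    query = urllib.parse.urlencode({"token": token})
    return urllib.request.Request(
        f"{API_BASE}/calls?{query}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_ai_call_request(
    token="YOUR_AUTH_TOKEN",
    source="+15551234567",
    destination="+15559876543",
    prompt="You are a helpful customer service assistant.",
)
print(request.get_method(), request.full_url)
# POST https://api.voipbin.net/v1.0/calls?token=YOUR_AUTH_TOKEN
```

To send the request, pass it to `urllib.request.urlopen` and read the `id` field from the JSON reply.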

This creates a call with an AI assistant that will:

Answer the call

Listen to the user’s speech using STT (Speech-to-Text)

Process the input through the AI engine with the given prompt

Respond using TTS (Text-to-Speech)

AI Talk with Real-Time Conversation

Use AI Talk for more natural, low-latency conversations powered by ElevenLabs. This enables interruption detection where the AI stops speaking when the user starts talking.

$ curl --location --request POST 'https://api.voipbin.net/v1.0/calls?token=<YOUR_AUTH_TOKEN>' \

--header 'Content-Type: application/json' \

--data-raw '{

"source": {

"type": "tel",

"target": "+15551234567"

},

"destinations": [

{

"type": "tel",

"target": "+15559876543"

}

],

"actions": [

{

"type": "answer"

},

{

"type": "ai_talk",

"option": {

"initial_prompt": "You are an expert sales representative for VoIPBIN. Help customers understand our calling and messaging platform. Be enthusiastic but professional.",

"language": "en-US",

"voice_type": "male"

}

}

]

}'

Response:

{

"id": "b2c3d4e5-f6a7-8901-bcde-f12345678901", // Save this as call_id

"status": "dialing",

"source": {"type": "tel", "target": "+15551234567"},

"destination": {"type": "tel", "target": "+15559876543"},

"direction": "outgoing",

"tm_create": "2026-02-18T10:31:00Z"

}

AI Talk provides:

Interruption Detection: Stops speaking when user talks

Low Latency: Streams responses in chunks for faster perceived response time

Natural Voice: Uses ElevenLabs for high-quality voice output

Context Retention: Remembers previous conversation exchanges

Note

AI Implementation Hint

The ai_talk action type (not ai) enables real-time voice interaction with interruption detection. Use ai_talk for live conversational AI. The older ai action type uses a simpler request-response pattern without interruption support and is recommended only for basic Q&A use cases.

AI with Custom Voice ID

Customize the AI voice by setting the voipbin.tts.elevenlabs.voice_id variable to an ElevenLabs Voice ID before the ai_talk action runs.

$ curl --location --request POST 'https://api.voipbin.net/v1.0/calls?token=<YOUR_AUTH_TOKEN>' \

--header 'Content-Type: application/json' \

--data-raw '{

"source": {

"type": "tel",

"target": "+15551234567"

},

"destinations": [

{

"type": "tel",

"target": "+15559876543"

}

],

"actions": [

{

"type": "answer"

},

{

"type": "variable_set",

"option": {

"key": "voipbin.tts.elevenlabs.voice_id",

"value": "21m00Tcm4TlvDq8ikWAM"

}

},

{

"type": "ai_talk",

"option": {

"initial_prompt": "You are a friendly receptionist. Greet callers warmly and help them with their inquiries.",

"language": "en-US"

}

}

]

}'

See Built-in ElevenLabs Voice IDs for available voice options.
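When action lists are built programmatically, the variable_set step can be added by a small helper. The sketch below inserts it immediately before the first ai_talk action; the function name `with_voice_id` is hypothetical, while the variable key comes from the example above.

```python
def with_voice_id(actions: list[dict], voice_id: str) -> list[dict]:
    """Return a new action list with variable_set placed before ai_talk."""
    voice_action = {
        "type": "variable_set",
        "option": {"key": "voipbin.tts.elevenlabs.voice_id", "value": voice_id},
    }
    result = list(actions)  # copy so the caller's list is not modified
    for index, action in enumerate(result):
        if action["type"] == "ai_talk":
            result.insert(index, voice_action)
            return result
    raise ValueError("no ai_talk action found")


actions = with_voice_id(
    [
        {"type": "answer"},
        {"type": "ai_talk", "option": {"initial_prompt": "You are a friendly receptionist.",
                                       "language": "en-US"}},
    ],
    "21m00Tcm4TlvDq8ikWAM",
)
print([action["type"] for action in actions])
# ['answer', 'variable_set', 'ai_talk']
```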

AI Summary for Call Transcription

Generate an AI-powered summary of a call transcription. This is useful for post-call analysis and record-keeping.

$ curl --location --request POST 'https://api.voipbin.net/v1.0/calls?token=<YOUR_AUTH_TOKEN>' \

--header 'Content-Type: application/json' \

--data-raw '{

"source": {

"type": "tel",

"target": "+15551234567"

},

"destinations": [

{

"type": "tel",

"target": "+15559876543"

}

],

"actions": [

{

"type": "answer"

},

{

"type": "transcribe_start",

"option": {

"language": "en-US"

}

},

{

"type": "talk",

"option": {

"text": "Hello! This call is being transcribed and summarized. Please tell me about your experience with our service.",

"language": "en-US"

}

},

{

"type": "sleep",

"option": {

"duration": 30000

}

},

{

"type": "ai_summary",

"option": {

"source_type": "transcribe",

"source_id": "${voipbin.transcribe.id}"

}

},

{

"type": "talk",

"option": {

"text": "Thank you for your feedback. We have recorded and summarized your call.",

"language": "en-US"

}

}

]

}'

The AI summary will:

- Process the transcription from transcribe_start

- Generate a structured summary of key points

- Store the summary in ${voipbin.ai_summary.content}

- Be accessible via webhook or API after the call

Real-Time AI Summary

Get AI summaries while the call is still active. Useful for live call monitoring and agent assistance.

$ curl --location --request POST 'https://api.voipbin.net/v1.0/calls?token=<YOUR_AUTH_TOKEN>' \

--header 'Content-Type: application/json' \

--data-raw '{

"source": {

"type": "tel",

"target": "+15551234567"

},

"destinations": [

{

"type": "tel",

"target": "+15559876543"

}

],

"actions": [

{

"type": "answer"

},

{

"type": "transcribe_start",

"option": {

"language": "en-US",

"real_time": true

}

},

{

"type": "ai_summary",

"option": {

"source_type": "call",

"source_id": "${voipbin.call.id}",

"real_time": true

}

},

{

"type": "connect",

"option": {

"source": {

"type": "tel",

"target": "+15551234567"

},

"destinations": [

{

"type": "tel",

"target": "+15551111111"

}

]

}

}

]

}'

Real-time summaries provide:

- Live Updates: Summary updates as conversation progresses

- Agent Assistance: Provides context to agents joining mid-call

- Call Monitoring: Enables supervisors to quickly understand ongoing calls

Best Practices

Initial Prompt Design:

- Be specific about the AI’s role and behavior

- Include constraints (e.g., “Keep responses under 30 seconds”)

- Define the tone (professional, friendly, technical, etc.)

Language Support:

- AI supports multiple languages (see supported languages)

- Match the language parameter with the user’s expected language

- AI can detect and respond in multiple languages if not constrained

Context Retention:

- AI remembers conversation history within the same call

- Variables set during the call are available to AI

- Use context to build multi-turn conversations

Error Handling:

- Always include fallback actions after AI actions

- Handle cases where AI may not understand the input

- Provide clear instructions to users about what they can ask
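One way to apply the fallback advice is to check an action list before submitting it. The sketch below appends a talk action when an AI action would otherwise be the final step; the helper `ensure_fallback` and the definition of "fallback" as "some action follows the AI action" are assumptions of this example.

```python
AI_ACTION_TYPES = {"ai", "ai_talk"}


def ensure_fallback(actions: list[dict], fallback_text: str) -> list[dict]:
    """Return a copy of actions, adding a talk step if an AI action is last."""
    result = list(actions)
    if result and result[-1].get("type") in AI_ACTION_TYPES:
        result.append({
            "type": "talk",
            "option": {"text": fallback_text, "language": "en-US"},
        })
    return result


flow = ensure_fallback(
    [{"type": "answer"}, {"type": "ai_talk", "option": {"language": "en-US"}}],
    "Sorry, we could not complete your request. Please call again later.",
)
print([action["type"] for action in flow])
# ['answer', 'ai_talk', 'talk']
```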

Troubleshooting

- 400 Bad Request:

Cause: Invalid engine_model format or missing required action fields.

Fix: Verify engine_model uses <provider>.<model> format (e.g., openai.gpt-4o). Ensure initial_prompt is provided.

- 402 Payment Required:

Cause: Insufficient VoIPBIN account balance.

Fix: Check balance via GET /billing-accounts. Top up before retrying.

- AI not responding during call:

Cause: LLM provider API key is invalid or rate-limited.

Fix: Verify the engine_key in your AI configuration. Check the provider’s status page and rate limits.

- No audio from AI:

Cause: TTS provider credentials are invalid or the voice ID does not exist.

Fix: Verify tts_type and tts_voice_id. Try using a default voice (omit tts_voice_id).
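The 400 Bad Request case caused by a malformed engine_model can often be caught locally. The sketch below checks only the `<provider>.<model>` shape; the helper name `split_engine_model` is hypothetical, and it does not verify that the provider or model actually exists.

```python
def split_engine_model(engine_model: str) -> tuple[str, str]:
    """Split '<provider>.<model>' at the first dot, or raise ValueError."""
    provider, separator, model = engine_model.partition(".")
    if not separator or not provider or not model:
        raise ValueError(
            f"engine_model must look like <provider>.<model>, got {engine_model!r}"
        )
    return provider, model


print(split_engine_model("openai.gpt-4o"))  # ('openai', 'gpt-4o')

try:
    split_engine_model("gpt-4o")  # provider prefix is missing
except ValueError as error:
    print(error)
```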

For more details on AI features and configuration, see AI Overview.